AI models have seen some of the most noteworthy changes in the tech industry over the last few years. With the rise of generative AI, powered by large language models and autonomous agents, we’ve seen companies optimize their workflows in ways that seem straight out of futuristic comic books.

However, hardware has had to adapt alongside the software. There have been many notable AI hardware trends in 2026, and we’ll go over the most important ones in this article so that you can better understand the possibilities of AI innovation, the restrictions you might face in the adoption of new technology, and where there might be a market gap.

If you want to experiment with artificial intelligence, you are in the right place. Our highly specialized developers at Trio have many years of experience and can help you with everything from developing the initial versions of your AI applications to integrating AI systems with your legacy software.

Our outsourcing and staff augmentation models mean you don’t need to worry about the resources consumed through locating and onboarding developers. Instead, you get the perfect fit within a couple of days, and you only need to pay for the services you use.

The Surge of Custom AI Chips in the 2026 Hardware Revolution

AI chips are probably one of the biggest developments. Although they don’t necessarily fall into 2026 exclusively, with many major developments in 2025 or well before, we’ve seen recent advancements that will affect many large tech companies worldwide.

The Rise of AI Reasoning & Specialized Silicon

You might be wondering: why are custom AI chips so hyped? Surely they aren’t so revolutionary? A few years ago, you might not have been far off. But as AI tools have continued to evolve, they have started to push general-purpose chips like CPUs and GPUs to their limits. In other words, software is increasingly constrained by what our existing hardware can deliver.

So, the only way to allow models to advance further is to create something more powerful.

NPUs (Neural Processing Units), TPUs (Tensor Processing Units), and other AI accelerators are all highly specialized hardware components built as custom silicon.

These custom silicon chips are usually designed to handle specific tasks like training AI models (such as LLMs), real-time inference, and AI reasoning. The custom silicon allows for faster processing, lower latency, and even reduced energy consumption, making it a strategic asset for enterprises and cloud providers, especially those focused on sustainable development.

This means that these chips will help us shift towards further AI development, such as advanced reasoning and the ability to adapt.

Nvidia, AMD & Emerging Players: Who’s Winning the Chip War

Nvidia is still at the forefront of the AI revolution, thanks to its H100 Tensor Core GPU and Blackwell architecture. AMD is also a key player and is quickly gaining ground in the accelerator and chip market with its MI300 series, where its price-to-performance ratio has been a major selling point.

There are a bunch of other small companies that are also making headlines, like Cerebras, Graphcore, and SambaNova, so it will be interesting to see where the two major players are in the next few years. Cerebras’ wafer-scale engine, for example, is the largest chip ever built and excels at massive-scale model training.

Some companies, especially those with larger budgets, have decided to work on their own chips. This decision has partly been made to reduce their reliance on third-party vendors. Some successful examples include Google’s TPU v5p and AWS Trainium.

The Impact of Quantum and Neuromorphic Chips on AI Hardware

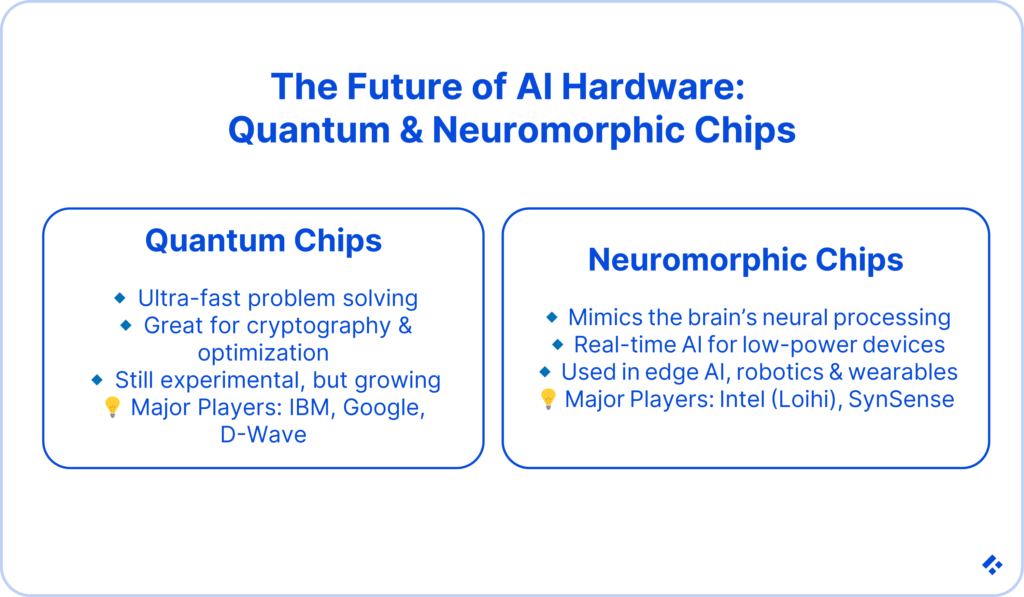

Quantum computing is one of the many tech trends to watch this year. It isn’t anywhere close to mainstream yet, but we’ve seen a lot of progress in quantum processors, and investments only continue to grow as companies realize the role they would play in AI optimization tasks such as reinforcement learning and cryptographic analysis.

Hybrid AI-quantum systems are also impacting AI advancements, as big companies like IBM focus their efforts in this sector.

Neuromorphic chips are also part of the shift toward more human-like AI. They are based on the brain’s neural structure and are increasingly used in edge AI applications thanks to their ultra-low power consumption and real-time processing, as in the case of Intel’s Loihi. These chips may allow for AI integration in vehicles, smart sensors, and wearables.

Cloud vs Edge: Where AI Workloads Will Live

We’ve mentioned edge AI. But what exactly is it, and why is it an important part of AI infrastructure? In short, edge AI runs directly on a device and can work without an internet connection, while cloud AI relies on centralized cloud servers or remote data centers.

Because data is processed where it is generated, edge AI can offer lower latency and keep sensitive data on the device, making it a great fit for industries where these factors might be a concern.

Hyperscalers’ Strategy: Cloud Migrations & AI Workloads

Enterprises are looking for environments that are scalable and high-performance, so that they can continue to train and deploy AI models effectively. Cloud giants in particular, like AWS, Azure, and Google Cloud, are really doubling down on the hosting that they offer.

Hyperscalers – as these companies are sometimes known – are providing end-to-end AI platforms that offer everything from specialized hardware to any tools that developers may need, and even entire pre-trained models. This attracts enterprises that want to find easier ways to build and deploy AI solutions.

Taking the same cloud platforms we’ve just mentioned as examples, Amazon SageMaker, Azure AI Studio, and Google Vertex AI are being bundled with custom silicon to create sticky ecosystems.

The Return of the Data Center Arms Race

All the AI demand in recent years has meant we’ve also made changes to hardware, as discussed above. These advancements, along with the demand for new AI processing power, mean that enterprises and other service providers are trying to build or find data centers equipped with the latest AI hardware.

According to Deloitte, global data center capacity is expected to double by 2027! Top trends driving this forward include the increased demand for AI. Of course, this increase in data centers means an increase in overall global data center electricity consumption.

As an added bonus, these new data centers can prioritize energy-efficient AI use, modular scalability, and long-term sustainability. There have already been a myriad of innovations in this sector, including liquid cooling, advanced power management systems, and AI-optimized rack designs.

The rest of 2026 will see these developments becoming industry standards, and will probably bring even more changes that data centers will be able to incorporate to become more efficient.

Local AI & On-Device Processing Boom

We’ve already mentioned the differences between edge and cloud computing above. The reality is that not all AI tasks need to be carried out in the cloud. “Local AI” or edge AI is becoming incredibly popular, especially in smartphones, wearables, and autonomous vehicles, which incorporate on-device AI hardware.

Cloud-based AI deployments expose data to more risks because it has to leave the device. They also consume a great deal of bandwidth while still struggling with latency unless network conditions are ideal. Running AI locally mitigates these issues.

Apple’s A18, Google’s Tensor G4, and Qualcomm’s Snapdragon X Elite are all great examples of big companies moving towards running processes locally to ensure efficient AI. These all integrate specialized NPUs that support local image recognition, language translation, and predictive text.

The Battle of Cost and Efficiency: AI Hardware Economics

We’ve gone over the sustainability considerations many companies are making when building AI hardware like data centers. But this tends to be more costly initially. Many are accepting this increased cost in favor of sustainability and efficiency. How might this reshape industries? Let’s take a look.

Rising Infrastructure Costs & Strategic Responses

Large-scale AI models are driving up infrastructure costs. It can take millions of dollars to train a single large language model, especially when you are trying to train it with as much data as possible. The final results are amazing; however, it does mean that startups and smaller companies with limited budgets experience a massive barrier to entry.
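To see how training bills reach the millions, a rough back-of-the-envelope estimate multiplies the number of accelerators by the hours they run and the hourly price of each one. The sketch below is illustrative only: the function name and every figure in it (accelerator count, run length, hourly rate) are hypothetical assumptions, not quotes from any vendor or real training run.

```python
def training_cost_usd(accelerators, hours, price_per_accelerator_hour):
    """Rough compute-only cost of a training run.

    Ignores storage, networking, staff, and failed or repeated runs,
    all of which tend to push real costs higher.
    """
    return accelerators * hours * price_per_accelerator_hour


# Hypothetical scenario: 1,024 accelerators running for 30 days
# at an assumed rate of $2.50 per accelerator-hour.
estimate = training_cost_usd(
    accelerators=1024,
    hours=30 * 24,
    price_per_accelerator_hour=2.50,
)
print(f"Estimated compute cost: ${estimate:,.0f}")
```

Under these assumed numbers, the compute alone comes to roughly $1.8 million for a single run, before accounting for experimentation or retraining.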

In order to get the most out of AI in 2026, companies are experimenting with strategies like model compression, efficient hardware utilization, and collaborative open-source initiatives. We’ve also seen a rise in multi-vendor hardware strategies and decentralized AI in an effort to reduce costs.

Making AI More Resource-Efficient: Hardware’s Role

Outside of more sustainable data centers, there are many other ways AI hardware can be made more efficient. Techniques like pruning, quantization, and sparsity optimization are applied to AI models, and custom AI chips are increasingly designed to support them at the silicon level. The goal of these techniques is to reduce computational load without negatively affecting the performance of the AI model.
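To make two of these techniques more concrete, here is a minimal Python sketch of symmetric 8-bit quantization and magnitude-based pruning on a small list of weights. It is a simplified illustration, not how any particular chip or framework implements them; the function names and the sample weights are made up for this example.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer values."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights, sparsity):
    """Set the smallest-magnitude fraction of weights to zero."""
    k = int(len(weights) * sparsity)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(by_magnitude[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


# Hypothetical weights, chosen only for illustration.
weights = [0.82, -0.05, 0.31, -1.27, 0.02, 0.64]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
pruned = prune_by_magnitude(weights, sparsity=0.5)

print("int8 values:", quantized)
print("restored:   ", [round(r, 3) for r in restored])
print("pruned:     ", pruned)
```

Quantization stores each weight in 8 bits instead of 32, at the cost of a small rounding error, while pruning removes the weights that contribute least. Hardware with native support for low-precision math and sparse data can turn both into real savings in memory traffic and energy.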

High Bandwidth Memory (such as HBM3) and CXL (Compute Express Link) interconnects are also common memory-related technologies that might improve AI data transfer, one of the biggest energy consumers in the AI usage process.

Open-Source Hardware

Open-source hardware initiatives, such as the RISC-V instruction set architecture, are allowing the AI market to turn away from traditional ways of sourcing chips, lowering the barrier to entry in this industry. They provide everyone with access to customizable hardware designs and foster global collaboration.

Many of these open-source providers also take into account the latest technologies when it comes to sustainability, ensuring that global AI frontiers have at least some base level of energy efficiency.

Multimodal Generative and Agentic AI: Hardware Requirements and AI Trends

AI used to be limited to single-mode tasks. Think of the kind of models that are purely dedicated to text generation or image recognition. But now, far more is possible. Two key trends include multimodal AI and agentic AI, each of which has unique hardware requirements.

Multimodal AI: What It Means for Hardware

Multimodal AI systems that process text, images, audio, and video require specialized hardware capable of handling diverse data types simultaneously. The problem is that these new capabilities mean greater pressure on bandwidth and latency. You may even need parallel processing units.

A lot of the hyperscalers we’ve already mentioned above are working on dedicated multimodal processing units (MPU) to help with these issues, but we are still a ways off. If you are in the AI field, this is one of the many developments to watch in 2026.

If you are not sure if you will benefit from this technology, consider consulting with an AI expert.

The Rise of AI Agents: Hardware Implications

AI agents are systems that need minimal human intervention. They can start and complete complex, multi-step tasks on their own. We are really looking forward to seeing what future AI agents can do, but for now, their hardware demands are not being met very well.

For these systems to work properly, they need real-time, low-power hardware optimized for decision-making, natural language processing, and perception. There could be many opportunities here, as companies from all over the world scramble to advance technology enough that these agents can complete more and more tasks using optimized hardware stacks.

Robotics & Physical AI: The Hardware Frontier

Robotics seems like the stuff of the future, but the reality is that there has been a big shift towards robotics and physical AI, moving from the simple, repetitive tasks that factory robots perform to more complex work. One of the best examples we’ve seen recently is autonomous drones.

These systems typically rely on specialized System-on-Chip (SoC) architectures, which integrate various processing units on a single chip. This helps reduce latency and overall power requirements, which is incredibly useful in battery-operated robotics or other tools that don’t have constant access to unlimited power.

Security, Privacy & Compliance: The Hidden Cost of AI Hardware

Security and data privacy are major concerns in the world at the moment, and compliance requirements are not only getting stricter but also being enforced more. AI hardware is no exception to these rules.

AI Hardware and Privacy Laws

The GDPR, CCPA, and other emerging laws, such as the AI-specific EU AI Act, all play a role in the way that companies approach privacy and data protection. While many of the standards set up by these regulatory bodies are targeted at software and cloud technology, they can also influence hardware.

Privacy-by-design principles could require hardware-level considerations like encryption, secure boot processes, and even physical access controls. This is especially true in sectors like healthcare, finance, and anything else where personal data is dealt with.

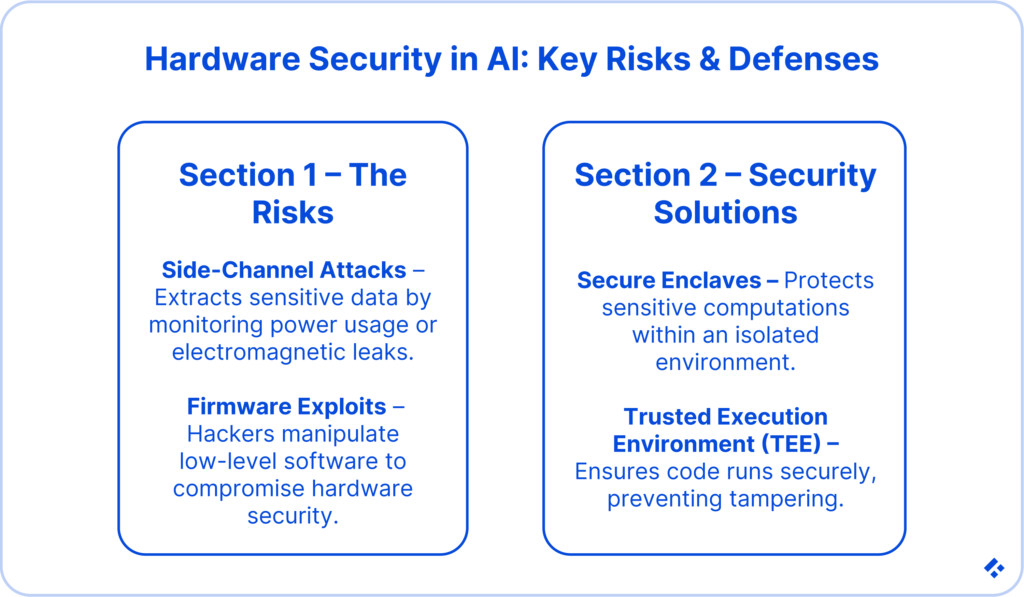

Hardware-Level Security in an AI-Driven World

Side-channel attacks, firmware exploits, and supply chain tampering are all security risks that AI hardware may be vulnerable to. It is important that companies producing hardware components like chips implement security features like secure enclaves, trusted execution environments (TEEs), and hardware-based identity modules.

In fact, many of these security features are now becoming industry standards, especially in enterprise AI systems.

We’ve also seen collaborations with cybersecurity firms. These collaborations are often used to develop real-time threat detection systems that can be embedded in silicon chips. The idea is that these systems will act as the first line of defence, giving users more peace of mind.

Where AI Hardware Manufacturing is Moving

AI hardware is becoming a global industry. But, like many other sectors that deal with physical products, quality is not always consistent, and imports/exports are at the mercy of sanctions and tariffs.

The U.S.-China AI Hardware Trade War

The U.S. has imposed strict controls on the export of advanced AI chips and manufacturing equipment to China. There are many import restrictions, tariffs, and even increased investments to try to promote domestic manufacturing in the respective countries.

It is possible that the restrictions between these two countries are going to promote the further success of places like India, Vietnam, and Malaysia, which are emerging as alternative hubs for AI hardware manufacturing. Many of these countries are not subject to regulations that are as strict as those between the U.S. and China, and benefit from internal initiatives.

Europe’s Push for AI Hardware Sovereignty

Europe is investing heavily in local semiconductor production. The aim of these initiatives, like the European Chips Act, is to reduce dependency on foreign suppliers. This will not only reduce costs if done correctly, but will also provide job opportunities within the EU.

If you want to know more about how you may benefit from AI hardware or need someone to help you build your automation systems, you are in the right place.

At Trio, we hire the top 1% of applicants and provide instant access to our clients in the most cost-effective manner. To promote your business’s growth through technology, contact us to schedule a free consultation and get an AI expert on your team!