Handling sensitive data has become one of the trickiest balancing acts for fintech companies.

On one side, you’ve got regulators demanding airtight compliance; on the other, customers expect smooth, instant transactions.

Growth only makes the tension sharper. You may find that what worked for a few thousand users no longer holds up against millions of records, multiple payment partners, and auditors who want to see every system diagram.

Data breaches and compliance failures do more than generate fines. They erode customer trust and can stall a product roadmap overnight.

Building your own tokenization framework may seem like the responsible answer. Still, the cost in engineering hours, specialized expertise, and ongoing audits can quietly drain resources that should be fueling product development.

Many late-stage startups learn this the hard way when PCI DSS audits balloon into multi-month ordeals.

Tokenization as a Service (TaaS) outsources the heavy lifting to a token service provider, allowing you to replace sensitive payment and customer data with tokens that hold no exploitable value.

The original data is stored securely, your audit scope shrinks, and your teams can keep their attention where it belongs: shipping features, scaling infrastructure, and preparing for the next growth milestone.

Our developers have worked with companies at a variety of stages, especially in the financial services industry. They can advise you on the best way to tokenize your assets and help you make sure your integrations are secure.

Understanding Tokenization

It helps to be clear about what tokenization actually means in practice.

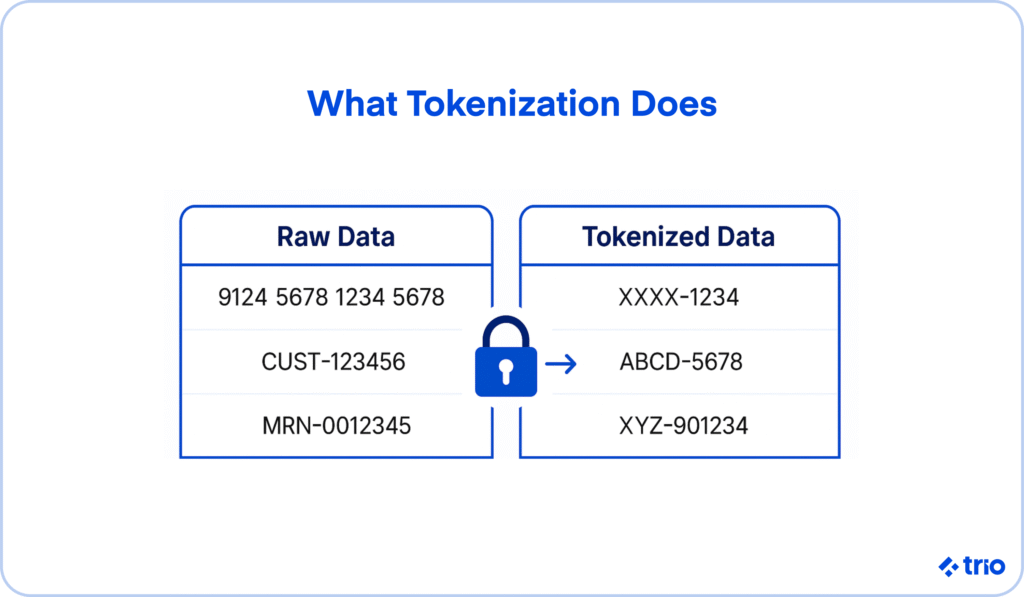

At its core, tokenization replaces sensitive values, such as card numbers, account IDs, and patient records, with meaningless placeholders.

These placeholders, or tokens, can move freely through your systems without exposing the original data to unnecessary risk.

What is Tokenization?

A token looks like any other string of characters, but it carries no intrinsic value. If someone were to intercept it, they couldn’t reverse it into a valid card number or customer ID without access to the tokenization system.

The real data sits in a separate, highly restricted environment. This separation is what makes tokenization so appealing to financial institutions that handle regulated data.

How Tokenization Works

When sensitive information enters the system, such as a payment card during checkout, it’s immediately sent to the tokenization service. The service generates a unique token and returns that token to the application or merchant system.

As mentioned above, the actual card number is stored elsewhere, often in an encrypted vault, and only the service can de-tokenize it when necessary.

There are, however, different flavors of this process.

Vaulted tokenization relies on a centralized, encrypted store that maps tokens back to original data. It’s simple to reason about, but it can introduce latency and create a single, high-value point of risk.
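To make the vaulted model concrete, here is a minimal in-memory sketch. The class `InMemoryTokenVault` and its methods are hypothetical, not any provider’s API, and a real vault would encrypt and persist its records behind strict access controls. Tokens are random digits with no mathematical link to the card number; the sketch keeps the last four digits, a common convention for receipts and support tools.

```python
import secrets


class InMemoryTokenVault:
    """Toy vaulted tokenization: random tokens mapped back to card numbers.

    Hypothetical illustration only. A real vault encrypts and persists its
    records and sits behind strict access controls.
    """

    def __init__(self) -> None:
        self._token_to_pan: dict[str, str] = {}
        # Reverse index so a repeat card gets the same token. A production
        # vault would index a keyed hash of the PAN, not the PAN itself.
        self._pan_to_token: dict[str, str] = {}

    def tokenize(self, pan: str) -> str:
        if not pan.isdigit() or len(pan) < 8:
            raise ValueError("expected a card-number-like digit string")
        if pan in self._pan_to_token:
            return self._pan_to_token[pan]
        while True:
            # Random digits with no mathematical link to the PAN, keeping the
            # last four. Real systems also make sure tokens cannot be
            # mistaken for live card numbers; this sketch does not.
            body = "".join(str(secrets.randbelow(10)) for _ in range(len(pan) - 4))
            token = body + pan[-4:]
            if token != pan and token not in self._token_to_pan:
                break
        self._token_to_pan[token] = pan
        self._pan_to_token[pan] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only code with access to the vault can map a token back.
        return self._token_to_pan[token]


vault = InMemoryTokenVault()
token = vault.tokenize("4111111111111111")
print(token)                    # random on each run, always ends in 1111
print(vault.detokenize(token))  # 4111111111111111
```

The single dictionary pair is exactly the “single, high-value point of risk” described above: whoever reaches it can reverse every token.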

Vaultless tokenization, by contrast, uses cryptographic algorithms to generate tokens without storing the sensitive data at all. This approach can reduce compliance scope and may lower operational overhead, though it demands more advanced cryptography and careful key management.
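The vaultless idea can be sketched with a small format-preserving Feistel network: a secret key, not a lookup table, turns a digit string into a same-length digit string and back. This is a toy for illustration only. Production vaultless systems typically rely on vetted format-preserving encryption such as NIST’s FF1 (SP 800-38G); the hypothetical `ToyVaultlessTokenizer` below only borrows that general Feistel structure and uses HMAC-SHA256 as a stand-in round function.

```python
import hashlib
import hmac


class ToyVaultlessTokenizer:
    """Toy format-preserving Feistel cipher over decimal strings.

    NOT FF1/FF3-1 and not reviewed cryptography: it only shows how a secret
    key, with no stored mapping, can turn digits into same-length digits and
    back again. All names are hypothetical.
    """

    ROUNDS = 10  # must be even so tokenize's output splits where detokenize expects

    def __init__(self, key: bytes, tweak: bytes = b"") -> None:
        self._key = key
        self._tweak = tweak

    def _round(self, i: int, half: str, width: int) -> int:
        # Keyed round function: HMAC-SHA256 over round index, tweak and half,
        # reduced to a number with `width` decimal digits.
        msg = bytes([i]) + self._tweak + b"|" + half.encode()
        digest = hmac.new(self._key, msg, hashlib.sha256).digest()
        return int.from_bytes(digest, "big") % 10**width

    @staticmethod
    def _halves(digits: str) -> tuple[int, int]:
        # Tiny domains are trivially brute-forced, so refuse short inputs.
        if not digits.isdigit() or len(digits) < 6:
            raise ValueError("expected at least 6 decimal digits")
        u = len(digits) // 2
        return u, len(digits) - u

    def tokenize(self, digits: str) -> str:
        u, v = self._halves(digits)
        a, b = digits[:u], digits[u:]
        for i in range(self.ROUNDS):
            width = u if i % 2 == 0 else v
            c = (int(a) + self._round(i, b, width)) % 10**width
            a, b = b, str(c).zfill(width)
        return a + b

    def detokenize(self, token: str) -> str:
        u, v = self._halves(token)
        a, b = token[:u], token[u:]
        for i in reversed(range(self.ROUNDS)):
            width = u if i % 2 == 0 else v
            c = (int(b) - self._round(i, a, width)) % 10**width
            a, b = str(c).zfill(width), a
        return a + b
```

Nothing is stored here: anyone holding the key can reverse every token, which is why key management becomes the critical control in vaultless designs.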

The Role of a Token Service Provider

Some teams try to build an in-house framework, but that usually requires cryptography specialists, compliance officers, and infrastructure engineers working in lockstep.

It’s not impossible, but it does pull energy away from product development, which is already under pressure in a scaling fintech.

What a Provider Actually Does

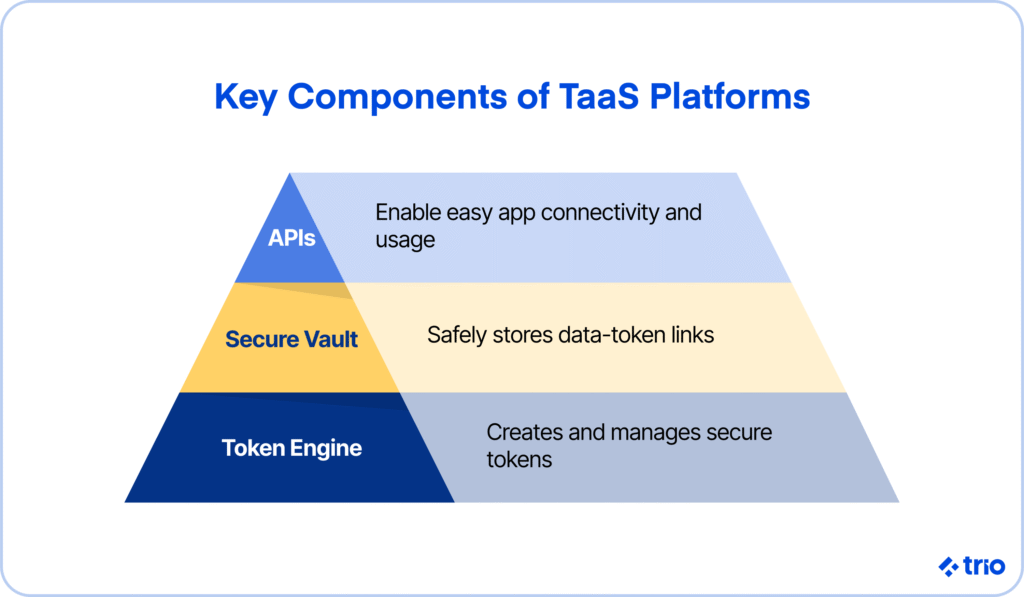

A token service provider (TSP) takes on the responsibility of generating tokens, maintaining the secure mapping back to the original data, and handling de-tokenization when it’s legitimately required.

They operate the vault (if one is used), implement strong encryption, enforce access controls, and often provide real-time monitoring to detect anomalies.
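Much of a provider’s value sits in the controls wrapped around de-tokenization. The sketch below, with hypothetical names throughout, shows three of them in miniature: an allow-list of services that may de-tokenize, an audit trail of every attempt, and a crude anomaly flag when one caller exceeds a per-minute threshold. Real providers use far richer policies and detection; the injectable `clock` only keeps the example testable.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Iterable


class GuardedDetokenizer:
    """Hypothetical wrapper adding an allow-list, an audit trail and a simple
    rate-based anomaly flag around a de-tokenization function."""

    def __init__(
        self,
        detokenize: Callable[[str], str],
        allowed_callers: Iterable[str],
        max_calls_per_minute: int = 60,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._detokenize = detokenize
        self._allowed = set(allowed_callers)
        self._limit = max_calls_per_minute
        self._clock = clock
        self._recent: dict[str, deque] = defaultdict(deque)  # caller -> call times
        self.audit_log: list[tuple[float, str, str]] = []  # (time, caller, outcome)
        self.alerts: list[tuple[float, str, int]] = []  # (time, caller, calls in window)

    def detokenize(self, token: str, caller: str) -> str:
        now = self._clock()
        if caller not in self._allowed:
            self.audit_log.append((now, caller, "denied"))
            raise PermissionError(f"{caller} is not allowed to de-tokenize")
        window = self._recent[caller]
        window.append(now)
        while now - window[0] > 60:  # keep only the last 60 seconds
            window.popleft()
        if len(window) > self._limit:
            # A sudden burst of de-tokenization is a classic exfiltration signal.
            self.alerts.append((now, caller, len(window)))
        self.audit_log.append((now, caller, "allowed"))
        return self._detokenize(token)
```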

From the outside, this looks seamless: your systems swap in tokens and rarely touch raw sensitive data at all.

Why the Choice of Provider Matters

Not all providers offer the same level of assurance.

Some emphasize ease of integration but have weaker audit support; others focus on regulatory alignment across multiple jurisdictions. When you’re choosing a partner, you want to look for clear commitments around:

- Certifications: PCI DSS compliance is a minimum; ISO/IEC 27001, SOC 2, or regional frameworks may also apply.

- SLAs and reliability: If your transaction volume spikes during product launches, will the service scale with you?

- Data residency: For cross-border fintechs, the location of the underlying vault or key store can affect compliance obligations.

- Support and expertise: Providers with fintech-native engineers can often anticipate PCI audit requirements better than a general security vendor.

A provider who aligns with your regulatory footprint and product roadmap can shorten audit cycles, reduce engineering distraction, and ultimately help you move faster without cutting corners on security.

Benefits of Tokenization as a Service (TaaS)

Tokenization as a Service is about solving pain points that can quietly erode both security and velocity if left unchecked.

A provider can’t magically remove every risk, but the shift in responsibility, from your engineering team to a specialized service, changes the economics of data protection noticeably.

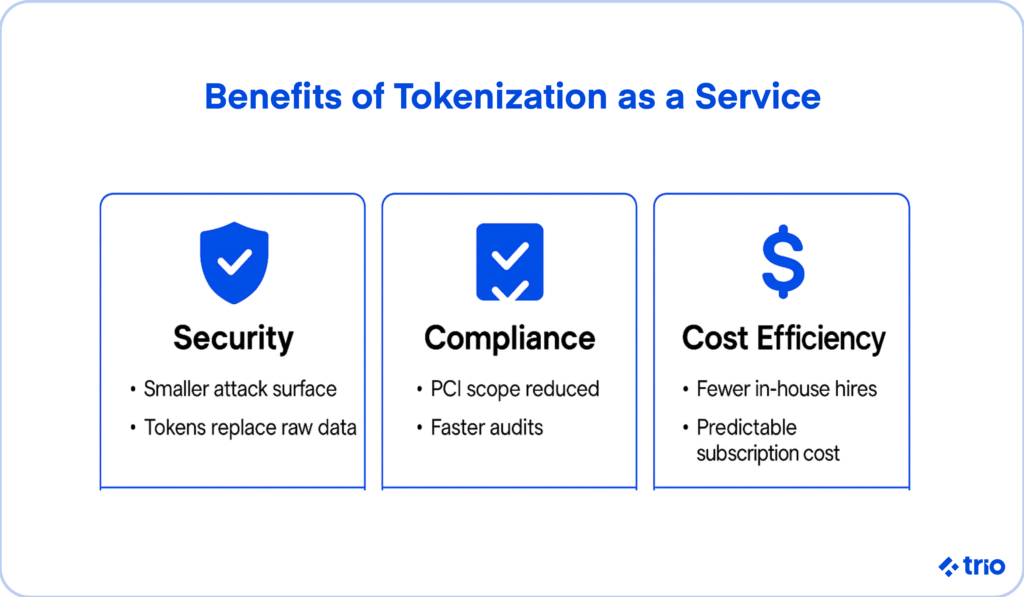

Enhanced Data Security

Replacing live card numbers or account identifiers with tokens means that most of your systems never see the original data at all.

If attackers were to compromise a database or intercept traffic, all they would find are tokens that are useless without access to the tokenization system.

Of course, no approach is perfect: token vaults can still be targeted, and vaultless models depend heavily on sound cryptography. Even so, the exposure surface shrinks dramatically compared to storing raw values across multiple applications.

If your fintech handles millions of transactions, this narrower attack surface can mean the difference between a minor incident and a catastrophic breach.

Compliance with Regulations

PCI DSS audits are a recurring source of stress for scaling fintechs.

Tokenization reduces the number of systems considered in scope because sensitive cardholder data is kept out of most of your infrastructure.

The result is fewer controls to implement, fewer systems to document, and shorter audit timelines.
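Scope reduction only holds if raw card numbers really stay out of the systems you declare out of scope. One common sanity check is a discovery scan of logs and exports for digit sequences that pass the Luhn checksum used by card numbers. The sketch below assumes candidates of 13 to 19 digits, optionally separated by spaces or dashes; expect false positives and treat it as a smoke test, not an audit tool.

```python
import re

# Candidate card numbers: 13-19 digits, optionally separated by spaces or dashes.
PAN_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn (mod 10) checksum: double every second digit from the right."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_probable_pans(text: str) -> list[str]:
    """Return Luhn-valid 13-19 digit sequences found in `text`."""
    hits = []
    for match in PAN_CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            hits.append(digits)
    return hits
```

Run against a log line such as `card=4111 1111 1111 1111 token=tok_9f8e7d`, it flags the raw card number and ignores the token, which is exactly the kind of leak that would pull a “tokenized” system back into scope.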

Something similar applies in other regulated contexts, such as HIPAA in healthcare, GDPR in Europe, or even SEC rules in capital markets.

Cost-Effectiveness for Businesses

Implementing tokenization in-house requires hiring engineers with niche expertise, budgeting for cryptographic key management, and preparing for round-the-clock compliance monitoring.

For a mid-sized fintech trying to hit aggressive product deadlines, this can quietly consume headcount and delay launches.

We can provide fintech-savvy engineers to augment your team, but you will still need to cover the cost of those additional hires.

By shifting to a service model, you turn those capital and operational costs into a subscription, one that flexes with transaction volume.
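One way to reason about that shift is a simple break-even model: in-house costs as a large fixed amount (headcount, audits, key management) plus a small per-transaction cost, and a service as a smaller fixed fee plus a per-transaction fee. The linear model and the function name are assumptions for illustration; real pricing is often tiered, and any figures you plug in should come from your own budgets and quotes.

```python
from typing import Optional


def break_even_volume(
    in_house_fixed: float,
    in_house_per_txn: float,
    taas_fixed: float,
    taas_per_txn: float,
) -> Optional[float]:
    """Yearly transaction volume at which in-house and TaaS costs are equal.

    Simplified linear model (an assumption, not anyone's pricing):
        in-house = in_house_fixed + in_house_per_txn * volume
        TaaS     = taas_fixed     + taas_per_txn     * volume
    Below the returned volume the service is cheaper; above it, in-house is.
    Returns None when the two lines never cross at a positive volume.
    """
    slope_gap = taas_per_txn - in_house_per_txn
    if slope_gap <= 0:
        return None  # the service never costs more per transaction
    volume = (in_house_fixed - taas_fixed) / slope_gap
    return volume if volume > 0 else None
```

For example, with a purely hypothetical $1,000,000 yearly in-house cost and a $0.50 per-transaction service fee, `break_even_volume(1_000_000, 0.0, 0.0, 0.5)` returns `2000000.0`: under this model, the service stays cheaper below two million transactions a year.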

A helpful way to think about it is the trade-off between control and focus.

Running everything in-house maximizes control but dilutes focus; leaning on a provider gives up some control but allows your teams to keep momentum where it matters.

Use Cases for Tokenization

Looking at common use cases shows how tokenization reshapes day-to-day operations in specific industries.

The details vary, but the common thread is this: by moving sensitive values out of the spotlight, you lower both business risk and compliance friction.

Tokenization in Digital Payments

Payments are the example we’ve used so far, and likely the most common use case you will encounter.

Every fintech that handles card transactions faces the same challenge: protecting cardholder data while keeping checkout seamless.

Tokenization ensures the card number is replaced before it ever touches your internal systems. To the customer, the experience is invisible; to your auditors, it’s a clear reduction in PCI scope.

Protecting Sensitive Data in Healthcare

Healthcare records contain not only payment details but also clinical history, identifiers, and insurance information.

Tokenization enables doctors and administrators to work with patient profiles without exposing raw data across all systems.

In practice, this may mean a hospital can run analytics on treatment outcomes using tokenized patient IDs, confident that the real identities remain protected under HIPAA and GDPR requirements.
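For analytics like this, a keyed deterministic token is often enough: the same patient ID always maps to the same token, so tables can still be joined and aggregated without the real identifier. The sketch uses HMAC-SHA256, which is one-way pseudonymization rather than reversible tokenization, so it suits workloads that never need the original ID back. Function names, field names, and keys are hypothetical, and anyone holding the key plus a list of candidate IDs can recompute the mapping, so the key needs the same protection as the data.

```python
import hashlib
import hmac
from collections import Counter


def patient_token(patient_id: str, key: bytes) -> str:
    """Deterministic keyed token: same ID and key always give the same token."""
    return hmac.new(key, patient_id.encode(), hashlib.sha256).hexdigest()


def pseudonymize(records: list[dict], key: bytes, field: str = "patient_id") -> list[dict]:
    """Copy records, replacing the identifier field with its keyed token."""
    return [{**r, field: patient_token(r[field], key)} for r in records]


def recovery_rate_by_treatment(treatments: list[dict], outcomes: list[dict]) -> dict:
    """Join tokenized tables on the token and compute recovery rate per treatment."""
    recovered = {o["patient_id"]: o["recovered"] for o in outcomes}
    totals, successes = Counter(), Counter()
    for t in treatments:
        if t["patient_id"] in recovered:
            totals[t["treatment"]] += 1
            successes[t["treatment"]] += int(recovered[t["patient_id"]])
    return {name: successes[name] / totals[name] for name in totals}
```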

Tokenization in E-commerce Platforms

Online retailers process a constant stream of sensitive checkout data. Without tokenization, each transaction adds to the liability surface. With tokenization, the retailer holds only tokens, while the actual card details remain in a provider’s secure environment.

That doesn’t just reduce the chance of a headline-making breach; it also reassures customers who are increasingly cautious about where they type their card numbers.

Tokenization of Real-World Assets (RWAs)

Real estate, bonds, and even art collections are being represented as digital tokens. The logic is similar: it makes an otherwise illiquid or sensitive asset easier to manage and trade by abstracting the underlying record into a token.

Providers in this space often combine tokenization with blockchain platforms, offering investors transparency and secondary market liquidity.

Tokenization Platforms and Services

The next challenge is choosing how to implement tokenization.

The market is crowded with providers making similar promises, yet the technical and regulatory details behind those promises can vary widely.

You need to find a platform that can withstand the pressure of audits, customer volume, and evolving product demands.

Choosing the Right Tokenization Platform

When evaluating tokenization platforms, look past the marketing language and focus on measurable factors.

Security certifications like PCI DSS compliance are table stakes, but you may also want to see ISO/IEC 27001, SOC 2, or regional frameworks that apply to your markets.

Our developers are immersed in the global fintech industry. They keep up with the constantly shifting regulations in the United States, as well as in common expansion markets such as Europe and Latin America.

Beyond certifications, consider the encryption methods in use, whether the provider supports vaulted or vaultless tokenization, and how they handle key management.

A practical way to evaluate is with a checklist. Questions you might ask:

- Does the platform demonstrably reduce PCI scope?

- How does it manage latency and throughput under peak load?

- What happens if you need data residency in multiple regions?

- Are there clear SLAs, and how binding are they in practice?

- Does the provider offer ongoing audit support, or is that left to you?
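For the latency question in particular, it helps to measure rather than take a datasheet at its word. Below is a small harness, assuming you wrap a trial account’s tokenization call in a zero-argument function (the `call` parameter); it fires requests from a thread pool and reports percentiles in milliseconds. Tail percentiles usually matter more at checkout than the median, and a laptop run is not a load test, so treat the numbers as a first filter.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def measure_latency(
    call: Callable[[], object], requests: int = 500, concurrency: int = 20
) -> dict[str, float]:
    """Run `call` `requests` times across `concurrency` threads and report
    latency percentiles in milliseconds."""

    def timed(_: int) -> float:
        start = time.perf_counter()
        call()
        return (time.perf_counter() - start) * 1000

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(timed, range(requests)))
    # 99 cut points; "inclusive" keeps every percentile within the observed range.
    cuts = statistics.quantiles(latencies, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98], "max": max(latencies)}
```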

Integration with Payment Systems

Integration is often the make-or-break factor.

A system that looks perfect on paper but requires months of reengineering to connect with your payment gateways or POS infrastructure quickly loses appeal.

The stronger platforms provide straightforward APIs, SDKs, and documentation that let you token-enable transactions without overhauling your core stack. A developer who has done similar integrations before can make the difference between a smooth rollout and a drawn-out one.

We’ve seen fintechs cut their PCI preparation time by weeks simply because the provider’s integration pulled entire subsystems out of scope.

It’s worth noting that integration ease can vary by provider.

One platform may offer plug-and-play support for Stripe and Adyen, while another demands more custom work.
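Because integration effort varies by provider, one way to keep switching costs down is to make your business logic depend on a narrow interface and put each vendor behind an adapter. The sketch assumes card details are captured by the provider’s client-side fields, so your server only ever receives a token. `PaymentTokenProvider`, `FakeProvider`, and `place_order` are hypothetical names, not any vendor’s SDK; a real adapter would call the vendor’s documented API inside `charge` and `refund`.

```python
from typing import Iterable, Protocol


class PaymentTokenProvider(Protocol):
    """Narrow interface the rest of the codebase depends on (hypothetical)."""

    def charge(self, token: str, amount_cents: int, currency: str) -> str: ...

    def refund(self, charge_id: str, amount_cents: int) -> str: ...


class FakeProvider:
    """In-memory stand-in; a real adapter would call the vendor's SDK here."""

    def __init__(self, known_tokens: Iterable[str]) -> None:
        self._known = set(known_tokens)
        self._charges: dict[str, int] = {}  # charge ID -> refundable amount

    def charge(self, token: str, amount_cents: int, currency: str) -> str:
        if token not in self._known:
            raise ValueError("unknown payment token")
        charge_id = f"ch_{len(self._charges) + 1}"
        self._charges[charge_id] = amount_cents
        return charge_id

    def refund(self, charge_id: str, amount_cents: int) -> str:
        remaining = self._charges[charge_id]  # KeyError for unknown charges
        if amount_cents > remaining:
            raise ValueError("refund exceeds remaining charge")
        self._charges[charge_id] = remaining - amount_cents
        return f"re_{charge_id}"


def place_order(
    provider: PaymentTokenProvider, payment_token: str, amount_cents: int, currency: str = "USD"
) -> dict:
    # Business logic only ever sees the token from the provider's client-side fields.
    charge_id = provider.charge(payment_token, amount_cents, currency)
    return {"payment_token": payment_token, "charge_id": charge_id, "amount_cents": amount_cents}
```

Switching vendors then means writing a new adapter class, while `place_order` and everything downstream stay unchanged.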

Evaluating Token Service Providers

Evaluating providers means weighing everything from their features to their organizational maturity.

A vendor with fintech-native engineers and compliance experts may anticipate your needs better than a generic data security company.

You also need to think about their track record in scaling with clients, their responsiveness during audit season, and their transparency in incident response.

Ask for client references, look for evidence of successful PCI audits, and press on details that matter to your specific growth path.

Compliance and Regulatory Considerations

Don’t limit your analysis to how TaaS affects your current compliance and regulatory obligations. Consider the implications as you scale and expand into new jurisdictions.

Understanding PCI DSS Requirements

For payment data, PCI DSS remains the dominant standard almost everywhere.

As mentioned earlier, without tokenization, every server, database, and process that touches card data falls into PCI scope.

With tokenization, the full set of PCI controls applies mainly to the tokenization environment itself (typically the provider’s platform), along with any of your systems that still capture card data or can request de-tokenization.

That’s not just a paperwork win; it means engineers can focus on product code instead of chasing PCI remediation tickets.

Regulatory Frameworks for Tokenization

Healthcare teams need HIPAA compliance, EU firms live under GDPR, and anyone offering securities or investment products may face SEC or FINRA rules.

Tokenization can help in each case by ensuring raw data is held in a tightly controlled environment rather than scattered across analytics tools or reporting pipelines.

Of course, the specifics vary. GDPR, for example, may still classify tokens as personal data if they can be linked back to an individual, which means tokenization helps but doesn’t eliminate obligations around consent and data subject rights.

For capital markets, newer frameworks around crypto-securities and digital assets are starting to reference tokenization directly, though the regulatory landscape is still moving.

If you are experimenting with tokenized assets, you should expect regulators to pay close attention to both the custody of tokens and the legal enforceability of the underlying asset claims.

Ensuring Continuous Compliance

The first audit after adopting tokenization may feel like a relief, but staying compliant is an ongoing process.

Providers can play a critical role here, offering real-time monitoring, proactive alerts, and audit reports that keep you ahead of evolving standards.

This doesn’t mean that your responsibility vanishes, though. Employees require training, access rights must be reviewed, and processes need to be updated whenever regulations change.
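Parts of the access review can be automated. The sketch below flags de-tokenization grants that have sat unused past a threshold, assuming you can export grants and last-used timestamps from your provider’s audit logs or your IAM system. The `Grant` shape and the 90-day default are illustrative choices, not a regulatory requirement.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Grant:
    principal: str                 # service account or employee
    permission: str                # e.g. "tokenize" or "detokenize"
    last_used: Optional[datetime]  # None if never used


def stale_detokenize_grants(
    grants: list[Grant], now: datetime, max_idle_days: int = 90
) -> list[str]:
    """Principals holding de-tokenization rights they have not used recently."""
    cutoff = now - timedelta(days=max_idle_days)
    return [
        g.principal
        for g in grants
        if g.permission == "detokenize" and (g.last_used is None or g.last_used < cutoff)
    ]
```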

Future Trends in Tokenization

The way providers design and deliver these services is shifting as both threats and opportunities evolve.

Some of these developments are already practical, while others feel more like bets on where the industry might land in the next five years.

Advancements in Tokenization Technology

Encryption methods continue to improve, and providers are experimenting with algorithms designed to resist future threats like quantum computing.

It’s not yet clear when quantum attacks will become a realistic danger, but many security teams see “quantum-safe” tokenization as a way to future-proof critical systems.

On a more immediate level, we’re also seeing movement toward lower-latency tokenization that can handle thousands of transactions per second without slowing down checkout or payment flows.

In this area, vaultless approaches appear to have an edge, since they can generate and reverse tokens without a lookup against a central vault.

Impact of Blockchain on Tokenization

Blockchain has become an increasingly common backdrop for tokenization, particularly in asset markets. Lawmakers and regulators are getting involved too, with stricter rules such as the US GENIUS Act for stablecoins and the EU’s MiCA regulation for crypto-assets.

Instead of simply storing tokens in a vault, providers are issuing blockchain-based representations of assets ranging from real estate to debt instruments.

The appeal lies in transparency and liquidity, allowing investors to verify ownership and potentially trade tokens on secondary markets. Still, this space comes with its own risks.

While there have been massive improvements, legal frameworks around crypto-securities remain patchy, and relying too heavily on blockchain hype may create more questions than it answers.

Emerging Use Cases and Innovations

IoT manufacturers are using tokens to anonymize device identifiers before streaming data to the cloud, reducing privacy risks in consumer products.

Digital identity systems are also experimenting with tokenization to give users more control over how much of their personal data they expose.

These are still emerging ideas, but they hint at a broader trajectory: tokenization becoming a foundational security layer, not just a compliance tool.

Conclusion

Building tokenization in-house is possible, but it often pulls engineering and security teams away from growth priorities.

If you do go this route, staff augmentation can fill the expertise gap in the short term, but weigh it against the other options available to you.

Tokenization as a Service offers an alternative: outsource the complexity to a provider who lives and breathes PCI DSS compliance, data security, and audit readiness.

By doing so, you shrink your risk surface, reduce audit scope, and free your teams to keep pushing the product forward.

The question isn’t whether to protect sensitive data; that’s non-negotiable. The question is how to protect it without slowing down your roadmap.

Our expert fintech developers can integrate into your team, assisting with everything from your decision-making process to getting your tokenization solutions up and running efficiently.

Reach out to get started!

Frequently Asked Questions

What is tokenization in data security?
Tokenization in data security is the process of replacing sensitive information with non-sensitive tokens that have no exploitable value.

What does a token service provider do?
A token service provider generates tokens for use in your systems, keeps the original sensitive data secure (or, in vaultless models, protects the keys that can reverse the tokens), and manages de-tokenization when it is legitimately required.

Is tokenization PCI DSS compliant?
Tokenization does not make you compliant on its own, but it supports PCI DSS compliance by reducing the number of systems in scope, which simplifies audits and lowers compliance costs.

What is the difference between vaulted and vaultless tokenization?
Vaulted methods store sensitive data in an encrypted vault that maps tokens back to the originals, while vaultless models use cryptographic algorithms to generate and reverse tokens without storing the data at all.

Why should fintechs use Tokenization as a Service instead of building in-house?
Tokenization as a Service reduces engineering overhead, speeds up compliance readiness, and can cut long-term operational costs compared with building in-house.

Can tokenization be used outside of payments?
Yes, tokenization can be used outside of payments, including in healthcare, e-commerce, IoT, and even for tokenizing real-world assets like real estate or securities.