Reliability tends to hide in plain sight. When systems behave as expected, no one notices. When they don’t, it’s chaos.

In fintech, that chaos may come in the form of a failed payment, a stalled API call, or a compliance flag blinking red at 2 a.m.

That’s why site reliability engineering (SRE) exists.

It brings a practical, engineering-led framework to keeping complex systems predictable and trustworthy.

SRE applies software engineering principles to operations so reliability can be measured, improved, and scaled, not just hoped for.

At Trio, our engineers specialize in fintech software development. We create systems that move real money. Reliability is critical.

If you want skilled developers who understand the industry’s nuances on your team, we can provide them in as little as 48 hours!

Let’s unpack the core SRE principles, the best practices that sustain reliable fintech platforms, and how metrics and the four golden signals give teams clarity when things start to wobble.

What Is a Site Reliability Engineer?

A site reliability engineer sits somewhere between a developer, an operations specialist, and a systems thinker. Their job is to make sure the software not only works, but keeps working, especially under pressure.

They rely on data, measuring everything that matters before making decisions, and they automate whatever machines can do faster and more accurately than people.

The Role and Responsibilities of an SRE

At its core, the SRE model treats reliable services as a solvable engineering problem.

SRE teams write code, design automation tools, and build self-healing systems that prevent small issues from becoming major outages.

They also define what “good” looks like through service level objectives (SLOs) and metrics that everyone, from engineers to executives, can understand.

A strong site reliability engineer develops tools that automatically correct recurring issues, but they also dig into logs and traces to understand why those issues appear in the first place.

In fintech, this attention to detail is especially critical. A few milliseconds of latency might not sound like much, but at scale, it can delay hundreds of thousands of payments.

Bridging Development and Operations

SRE grew out of the same need that created DevOps: breaking down the walls between development and operations. But while DevOps emphasizes collaboration, SRE adds a layer of accountability through measurement.

Instead of vague promises about uptime, SRE teams define precise goals using metrics and error budgets.

They measure, track, and report reliability like a feature, with transparency that helps engineering teams and business stakeholders align.

When both sides know the limits, developers can release features faster, and operations teams can support that speed without guessing how much risk they’re taking on.

Measuring Success With Reliability and Availability

Every SRE team measures success differently, but it always comes back to one thing: how well the system performs for real users.

Take a lending app that promises quick approvals. The service level indicator (SLI) might be “time to loan decision,” while the SLO could be “99.95% of applications processed within three seconds.”

If processing starts slowing down, the error budget, that small margin of acceptable failure, begins to shrink. When it runs out, the team stops releasing new features and focuses on restoring performance.

It’s a simple but powerful mechanism that shifts reliability from guesswork to accountability.
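To make the lending example concrete, here is a minimal Python sketch of the error budget check. It assumes you already count, per SLO window, the total loan applications and the ones that missed the three-second target; the `SloWindow` structure and function names are hypothetical, not taken from any particular monitoring tool.

```python
from dataclasses import dataclass


@dataclass
class SloWindow:
    """Request counts for one SLO window, e.g. the last 30 days (hypothetical shape)."""
    total_requests: int
    slow_requests: int       # loan applications that took longer than 3 seconds
    target: float = 0.9995   # 99.95% of applications within 3 seconds


def error_budget_remaining(window: SloWindow) -> float:
    """Fraction of the error budget still unspent; negative once it is overspent."""
    if not 0 < window.target < 1:
        raise ValueError("target must be between 0 and 1 (exclusive)")
    if window.total_requests == 0:
        return 1.0  # no traffic yet, so nothing has been spent
    allowed_slow = (1 - window.target) * window.total_requests
    return 1 - window.slow_requests / allowed_slow


def releases_allowed(window: SloWindow) -> bool:
    """Feature releases pause once the budget is exhausted."""
    return error_budget_remaining(window) > 0


# 2,000,000 applications at 99.95% leave room for about 1,000 slow ones.
window = SloWindow(total_requests=2_000_000, slow_requests=400)
print(f"{error_budget_remaining(window):.0%} of the error budget left")  # 60% of the error budget left
print(releases_allowed(window))  # True
```

The release decision is deliberately binary here; real teams often add softer stages (extra review, smaller rollouts) before a full freeze.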

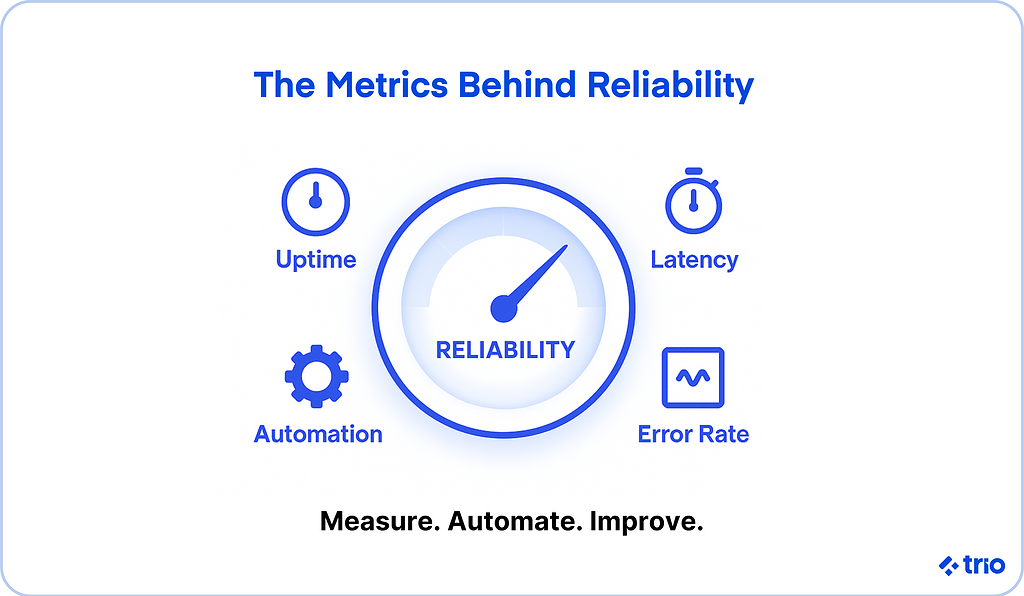

As Trio’s engineers often remind clients, metrics make reliability measurable, and measurability makes it improvable.


Core Principles of SRE

The principles of site reliability engineering offer structure in an environment defined by change.

They help SRE teams balance stability and innovation without tipping too far toward either side.

Embracing Risk and Error Budgets

Perfect systems don’t exist, and pretending they do only leads to frustration. The error budget acknowledges that some level of failure is acceptable, even healthy, if it enables progress.

If your SLO allows 0.1% downtime, that’s your room to experiment.

It gives developers the psychological permission to move fast while still respecting the limits of system reliability.

When the budget’s gone, so is the freedom to deploy. It’s a natural feedback loop.

This approach creates a shared sense of responsibility.

Fintech companies, especially those handling regulated data, often find this balance essential to maintaining system reliability without freezing innovation altogether.
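Translating an availability SLO into clock time makes the “room to experiment” tangible. The sketch below assumes a 30-day window; a 0.1% allowance (a 99.9% SLO) works out to 43.2 minutes of downtime per 30 days.

```python
def allowed_downtime_minutes(slo: float, window_days: int = 30) -> float:
    """Minutes of downtime an availability SLO permits over the window."""
    if not 0 < slo < 1:
        raise ValueError("slo must be a fraction between 0 and 1")
    return (1 - slo) * window_days * 24 * 60


# Compare a few common targets over a 30-day window.
for slo in (0.999, 0.9995, 0.9999):
    print(f"{slo:.2%}: {allowed_downtime_minutes(slo):.1f} min per 30 days")
```

Each extra “nine” shrinks the budget tenfold, which is why the choice of SLO is as much a business decision as a technical one.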

Service Level Indicators (SLIs) and Objectives (SLOs)

SLIs and SLOs are the twin pillars of measurable reliability.

The SLI defines what’s being observed (latency, throughput, or error rate, for example), while the SLO defines what level of it is acceptable.

A well-crafted SLO might read: “99% of API requests complete within 200 milliseconds.” Anything less signals a problem worth investigating.
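In code, the SLI is simply the fraction of “good” requests and the SLO is the target it must meet. This minimal sketch assumes you have raw per-request latencies in milliseconds; in practice they would come from your metrics backend rather than a Python list.

```python
def latency_sli(latencies_ms: list[float], threshold_ms: float = 200.0) -> float:
    """Fraction of requests that completed within the latency threshold."""
    if not latencies_ms:
        return 1.0  # no traffic: treated as compliant (a policy choice, not a rule)
    good = sum(1 for ms in latencies_ms if ms <= threshold_ms)
    return good / len(latencies_ms)


def meets_slo(latencies_ms: list[float], objective: float = 0.99) -> bool:
    """True when the SLI is at or above the objective (99% by default)."""
    return latency_sli(latencies_ms) >= objective


samples = [120.0] * 985 + [450.0] * 15  # 1,000 requests, 15 of them slow
print(latency_sli(samples))  # 0.985
print(meets_slo(samples))    # False
```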

SRE teams rely on these numbers to decide where to spend their time.

If SLIs drift below target, they know it’s time to stabilize before shipping new features. And when they stay healthy, they have data-backed confidence to experiment. It’s a simple structure that keeps teams aligned and honest.

Eliminating Toil Through Automation

Toil is the silent productivity killer. It’s the repetitive work that keeps the lights on but rarely moves things forward: manual restarts, server patching, endless alert acknowledgments.

The best SRE practices aim to automate every task that doesn’t require human judgment.

Whether through scripts, pipelines, or infrastructure-as-code and configuration management tools like Terraform or Ansible, SRE teams focus on replacing repetition with reliability.

It’s also about mental load. Every minute an engineer spends resetting a service is a minute they can’t spend improving it.

At Trio, we’ve seen fintech platforms reclaim entire sprint cycles simply by automating their deployment checks or recovery scripts. The time saved compounds quickly.

Simplicity as a Reliability Multiplier

It’s tempting to add complexity as systems grow. More dashboards, more dependencies, more “just in case” redundancy. Yet in practice, every added moving part increases fragility.

SREs often say that complexity fails silently, until it doesn’t. Keeping things simple makes failures easier to spot and fix. It also keeps incident response grounded in logic rather than guesswork.

The core principles of SRE treat simplicity as a multiplier for reliability. It sounds almost too obvious, but it’s worth repeating: the easiest systems to automate and maintain are the ones you can explain on a whiteboard without running out of markers.

Metrics and Measurement in SRE

Without metrics, you’re flying blind. But with too many, you’re drowning in noise. SRE is about finding the right balance.

Why Metrics Matter in SRE

Metrics turn intuition into evidence. They show what’s happening instead of what people think is happening. But not all metrics are equally useful.

Some look impressive but tell you very little, a trap many teams fall into early on.

A good metric ties directly to user experience. Tracking “average latency,” for instance, might hide the handful of users facing five-second delays. Measuring the 95th percentile response time paints a truer picture.

In fintech, that kind of detail isn’t optional. A slow trade confirmation or delayed transfer can have financial consequences.
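A quick illustration of how an average can hide a painful tail, using Python’s standard `statistics` module on a made-up sample of 94 fast requests and 6 five-second ones:

```python
import statistics

# Hypothetical sample: 94 fast requests and 6 very slow ones (milliseconds).
latencies_ms = [100.0] * 94 + [5000.0] * 6

mean = statistics.mean(latencies_ms)
# quantiles(n=100) returns the 99 cut points between percentiles; index 94 is p95.
p95 = statistics.quantiles(latencies_ms, n=100)[94]

print(f"mean = {mean:.0f} ms, p95 = {p95:.0f} ms")  # mean = 394 ms, p95 = 5000 ms
```

The mean suggests a sub-half-second experience; the 95th percentile shows that some users are waiting five seconds. Production systems usually estimate percentiles from histograms rather than raw samples, but the lesson is the same.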

SRE teams use these metrics for accountability, too.

They help explain reliability in language that makes sense to executives: “We processed 10 million transactions yesterday with 99.97% success.” That’s the kind of clarity good data brings.

Business Metrics vs. System Metrics

Business metrics measure what users care about: success rates, completion times, and conversion ratios. System metrics measure the plumbing underneath: CPU, memory, queues, or thread counts.

Looking at only one side can mislead you.

A system might appear healthy from a CPU perspective, but still drop payments because of an upstream dependency. Conversely, slightly elevated resource use might be fine if users aren’t feeling it.

An effective SRE program connects both layers so teams can see how performance and user experience interact.

In regulated environments like fintech, that correlation also helps with auditability, because it provides proof that reliability is maintained and monitored responsibly.

Leading and Lagging Indicators

Some metrics warn you before users notice problems (leading indicators), while others confirm the impact after the fact (lagging indicators).

For example, rising latency in an API might suggest an issue long before customers start complaining. By the time conversion rates or app ratings drop, it’s already too late.

Teams that follow good SRE practices track both, so they can react early and still confirm later that their fixes worked.

Balancing these perspectives is what separates teams that recover quickly from those that scramble after every incident.

Effective Metrics for Monitoring and Observability

Metrics alone aren’t enough. What matters is how well you can see and interpret them. That’s where observability comes in.

In short, monitoring tells you when something breaks; observability helps you understand why.

A solid observability platform brings together metrics, logs, and traces into one coherent view. You might see that high latency on your loan approval API isn’t a database issue at all, but a slow authentication service two hops away.

Modern monitoring and instrumentation tools like Grafana, Prometheus, Datadog, and OpenTelemetry make this easier, though not effortless. There’s still judgment involved: deciding what to track, what to ignore, and when an alert actually matters.

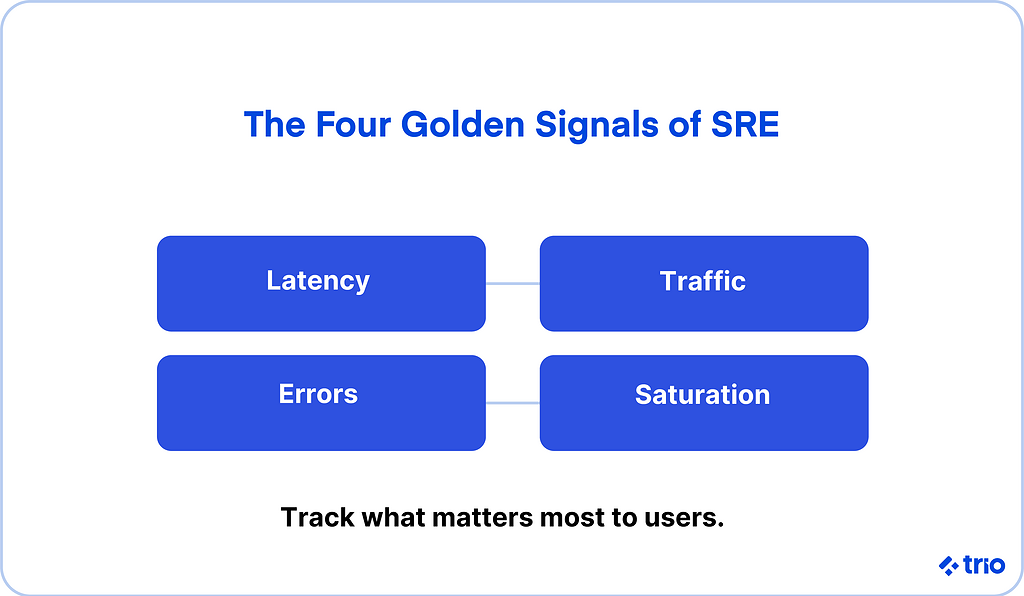

Golden Signals: The Foundation of SRE Monitoring

Once metrics are in place, the next challenge is focus. Too many signals can blur the picture. The four golden signals offer a practical way to cut through the noise.

Defined as latency, traffic, errors, and saturation, they’re simple enough for any SRE team to track but powerful enough to describe nearly every production issue that matters.

Used consistently, they turn monitoring into a shared language. They tell you what’s normal, what’s urgent, and what’s about to go wrong, often before the customer ever feels it.
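As a rough sketch, the four signals can be summarized for one time window from per-request records plus a resource gauge. The `Request` record, the choice of p99 for latency, and the use of worker-pool utilization as the saturation measure are all assumptions for illustration; latency is computed over successful requests only, so fast failures don’t flatter the number.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    """One completed request in the window (hypothetical record shape)."""
    latency_ms: float
    ok: bool


def golden_signals(requests: list[Request], window_s: float,
                   busy_workers: int, total_workers: int) -> dict[str, float]:
    """Summarize one time window as latency, traffic, errors, and saturation."""
    n = len(requests)
    ok_latencies = sorted(r.latency_ms for r in requests if r.ok)
    # Latency: nearest-rank p99 of successful requests only.
    if ok_latencies:
        rank = math.ceil(0.99 * len(ok_latencies))
        latency_p99 = ok_latencies[rank - 1]
    else:
        latency_p99 = 0.0
    return {
        "latency_p99_ms": latency_p99,
        "traffic_rps": n / window_s,                              # requests per second
        "error_rate": (n - len(ok_latencies)) / n if n else 0.0,  # failed / total
        "saturation": busy_workers / total_workers,               # pool utilization
    }


reqs = [Request(80.0, True)] * 95 + [Request(30.0, False)] * 5
print(golden_signals(reqs, window_s=10.0, busy_workers=45, total_workers=50))
```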

Applying Golden Signals in Practice

Understanding the four golden signals is one thing. Using them effectively is another.

The real power of these metrics appears when teams build them into their day-to-day workflows: dashboards, alerts, and decisions about where to spend reliability effort.

Setting Up Dashboards for Golden Signal Visibility

Dashboards are the heartbeat of any SRE team. They bring together latency, traffic, errors, and saturation into a single view that reveals the system’s overall health. But the best dashboards don’t just display numbers; they tell a story.

Imagine a fintech payments platform: during peak hours, latency climbs slightly, but throughput holds steady, and errors stay flat. That pattern might suggest saturation at the API layer, not a total failure. If latency suddenly spikes while traffic drops, though, it’s likely something more serious, perhaps a deployment gone sideways or a database lock.

SRE best practices encourage visualizing this data in context with tools like Prometheus, Grafana, or Datadog.

Instead of overwhelming engineers with 50 panels, focus on the few that reflect service reliability from the customer’s point of view.

And always annotate dashboards with real-world events: releases, incidents, or even weather anomalies that affected network traffic.

Correlating Golden Signals With SLIs and SLOs

Metrics don’t exist in isolation. The golden signals feed directly into your service level indicators (SLIs), which in turn define your SLOs.

If your latency SLO is “95% of transactions complete in under 200 ms,” the latency golden signal is how you track progress against it in real time. When the graph trends upward, more transactions miss that threshold and your error budget begins to shrink, a subtle but important warning that reliability is slipping.

This feedback loop helps SRE teams move from reacting to predicting. When SLIs and SLOs are tied directly to the golden signals, alerting becomes smarter and less noisy. Instead of hundreds of red notifications, you get one clear message: “Error rate exceeded target for the past five minutes.” Simple, actionable, and focused.
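One common way to tie alerts to the SLO is to alert on burn rate: how many times faster than “sustainable” the error budget is being spent. The sketch below pages only when both a long window (say, one hour) and a short window (say, five minutes) are burning fast. The 14.4 threshold follows an example in Google’s SRE Workbook for a 30-day, 99.9% SLO; treat it and the function names as assumptions to tune, not fixed rules.

```python
def burn_rate(error_rate: float, slo: float) -> float:
    """How fast the error budget is being spent; 1.0 means exactly on budget."""
    return error_rate / (1 - slo)


def should_page(long_window_error_rate: float, short_window_error_rate: float,
                slo: float = 0.999, threshold: float = 14.4) -> bool:
    """Page only if both the long and short windows exceed the burn-rate threshold.

    The long window filters out brief blips; the short window lets the alert
    clear soon after the problem is fixed.
    """
    return (burn_rate(long_window_error_rate, slo) >= threshold
            and burn_rate(short_window_error_rate, slo) >= threshold)


print(should_page(0.02, 0.03))    # True: roughly 20x and 30x the sustainable rate
print(should_page(0.02, 0.0005))  # False: errors have already subsided
```

Requiring both windows to agree is what turns hundreds of red notifications into one message that is still true when someone reads it.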

Golden Signals in Multi-Service Architectures

Modern software systems rarely live in isolation. Fintech applications, in particular, depend on sprawling distributed systems: microservices, APIs, third-party integrations, and external banking partners. Each link adds another potential point of failure.

Applying the four golden signals across these services requires careful coordination. If the payment gateway slows down, it affects loan processing, fraud detection, and reporting dashboards downstream. Without consistent metrics, you’ll see the symptoms but not the source.

The best SRE practices establish shared definitions for each signal across services: one latency threshold, one error format, and one alerting policy. It’s tedious work up front, but it saves hours of confusion during incident response, when clarity matters most.
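One lightweight way to enforce shared definitions is a small, immutable policy object that every service imports instead of hard-coding its own thresholds. The field names and values below are hypothetical placeholders; in practice this often lives in a shared library or config repository.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SignalPolicy:
    """One shared definition of 'healthy' for every service (hypothetical values)."""
    latency_threshold_ms: float = 200.0
    max_error_rate: float = 0.001
    alert_after_minutes: int = 5
    error_fields: tuple[str, ...] = ("service", "code", "message", "trace_id")


SHARED_POLICY = SignalPolicy()


def is_breaching(p95_latency_ms: float, error_rate: float,
                 policy: SignalPolicy = SHARED_POLICY) -> bool:
    """Apply the same thresholds no matter which service is reporting."""
    return (p95_latency_ms > policy.latency_threshold_ms
            or error_rate > policy.max_error_rate)


# The payment gateway and loan processing are judged by identical rules.
for service, p95, errors in [("payment-gateway", 240.0, 0.0004),
                             ("loan-processing", 150.0, 0.0002)]:
    print(service, "breaching" if is_breaching(p95, errors) else "healthy")
```

Making the dataclass frozen keeps individual services from quietly loosening a threshold at runtime.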

Automation and Continuous Improvement

Automation is how teams act on what they see. The goal is to free your people from repetitive work so they can focus on solving harder problems.

Smarter Alerting and Escalation

Alert fatigue is real. Too many alerts, and teams start tuning them out. Too few, and real issues go unnoticed. The balance lies in context and automation.

Smarter alert systems use historical data to decide when to escalate and when to stay quiet. They might notice that a latency spike resolves itself after 30 seconds every morning during a backup process, and automatically suppress it next time.
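A simplified version of that idea: learn from alert history which hours of the day produce short, self-resolving spikes, then hold back escalation for a spike in one of those hours unless it outlasts the usual duration. The history format, the 30-second grace period, and the five-day minimum are illustrative assumptions.

```python
from datetime import datetime, timedelta


def recurring_blip_hours(history: list[tuple[datetime, float]],
                         max_duration_s: float = 30.0,
                         min_days: int = 5) -> set[int]:
    """Hours of the day in which short, self-resolving spikes keep recurring.

    `history` holds (spike_start, duration_seconds) pairs from past alerts.
    """
    days_seen: dict[int, set] = {}
    for start, duration_s in history:
        if duration_s <= max_duration_s:
            days_seen.setdefault(start.hour, set()).add(start.date())
    return {hour for hour, days in days_seen.items() if len(days) >= min_days}


def needs_escalation(spike_start: datetime, elapsed_s: float,
                     known_hours: set[int], grace_s: float = 30.0) -> bool:
    """Escalate unless this matches a known blip that hasn't outlasted its usual length."""
    known_blip = spike_start.hour in known_hours
    return not known_blip or elapsed_s > grace_s


# A week of 20-second spikes at 06:00 while a morning backup runs.
history = [(datetime(2024, 1, 1, 6, 0) + timedelta(days=d), 20.0) for d in range(7)]
hours = recurring_blip_hours(history)
print(hours)                                                       # {6}
print(needs_escalation(datetime(2024, 1, 9, 6, 1), 10.0, hours))   # False
print(needs_escalation(datetime(2024, 1, 9, 6, 1), 120.0, hours))  # True
```

Note that the known pattern only delays escalation; a spike that keeps going still pages someone.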

SRE teams can also use auto-remediation scripts to handle known patterns. If CPU saturation passes 90% for more than five minutes, a script could trigger a new instance or throttle non-critical jobs. The goal is to act quickly without burning out the people behind the dashboards.
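The “more than five minutes” condition matters: reacting to a single high reading would cause flapping. Here is a minimal sketch, assuming one CPU utilization sample per minute (as a fraction) and a hypothetical `remediate` hook where your autoscaler or job throttling would be called:

```python
from collections import deque


class SustainedThreshold:
    """Fire only when every sample in the last `window` samples exceeds `limit`."""

    def __init__(self, limit: float = 0.90, window: int = 5):
        self.limit = limit
        self.samples: deque[float] = deque(maxlen=window)

    def observe(self, cpu_utilization: float) -> bool:
        self.samples.append(cpu_utilization)
        return (len(self.samples) == self.samples.maxlen
                and all(s > self.limit for s in self.samples))


def remediate() -> str:
    # Hypothetical hook: scale out or pause non-critical batch jobs here.
    return "scale_out"


# One sample per minute, so window=5 approximates "above 90% for five minutes".
trigger = SustainedThreshold()
for minute, cpu in enumerate([0.95, 0.97, 0.93, 0.96, 0.99]):
    if trigger.observe(cpu):
        print(f"minute {minute}: {remediate()}")  # minute 4: scale_out
```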

It’s worth noting that automation doesn’t eliminate the need for judgment. It just moves humans higher up the decision chain, where their expertise has the most impact.

Auto-Remediation and Self-Healing Systems

The dream of every site reliability engineer is a system that fixes itself before anyone notices it’s broken. While full autonomy is rare, partial auto-remediation is increasingly common.

Picture an SRE team maintaining a credit scoring service. When traffic surges, autoscaling rules provision extra instances automatically. If those new nodes fail health checks, the system rolls back and sends a concise summary to Slack: “Scaling event failed, retry scheduled.” Nobody had to wake up for it.
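The flow in that scenario can be sketched as a single function with its side effects passed in. All five callables are hypothetical stand-ins for a cloud provider API, a retry scheduler, and a chat integration; the point is the control flow: add capacity, verify health, roll back the whole event on failure, and report concisely.

```python
from typing import Callable


def scale_out(add_instances: Callable[[int], list[str]],
              is_healthy: Callable[[str], bool],
              remove_instances: Callable[[list[str]], None],
              schedule_retry: Callable[[], None],
              notify: Callable[[str], None],
              count: int = 2) -> bool:
    """Add `count` instances; if any fail health checks, roll back all of them."""
    new_nodes = add_instances(count)
    unhealthy = [node for node in new_nodes if not is_healthy(node)]
    if unhealthy:
        remove_instances(new_nodes)  # roll back the whole scaling event
        schedule_retry()
        notify(f"Scaling event failed ({len(unhealthy)}/{len(new_nodes)} nodes unhealthy), retry scheduled.")
        return False
    notify(f"Scaled out by {len(new_nodes)} instances.")
    return True


# Usage with in-memory fakes standing in for the cloud API and Slack.
messages: list[str] = []
retries: list[bool] = []
ok = scale_out(
    add_instances=lambda n: [f"node-{i}" for i in range(n)],
    is_healthy=lambda node: node != "node-1",  # simulate one bad node
    remove_instances=lambda nodes: None,
    schedule_retry=lambda: retries.append(True),
    notify=messages.append,
)
print(ok, messages)
```

Injecting the side effects this way also makes the remediation logic testable without touching real infrastructure.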

This kind of automation takes time to build, but it’s one of the most powerful ways to improve system reliability. Done well, it transforms reliability from reactive maintenance to proactive design.

Continuous Learning From Incidents

Every incident response is a learning opportunity if teams treat it that way. Too often, post-mortems turn into blame sessions or checklists. SRE reframes them as experiments.

After an outage, the question isn’t “Who broke it?” but “What signals did we miss?” Maybe the alert fired too late, or maybe it fired correctly but was buried in noise. The fix might be technical, or it might be cultural, such as a communication gap between development and operations teams.

High-performing SRE teams keep lightweight records of incidents, actions taken, and lessons learned. They use those records to update automation, adjust SLOs, or refine monitoring systems. The cumulative effect of this process is enormous.

Continuous learning is how reliability becomes part of the company’s DNA, something Trio emphasizes when helping fintech teams mature their reliability culture. Good tooling matters, but good habits matter more.

Building Reliable, Observable Systems

Even the most sophisticated monitoring setup means little without a culture that values transparency.

The Culture of Observability

An observable system is one that can explain itself. You should be able to ask, “Why did this transaction fail?” and find the answer without guesswork.

Building that culture starts with empowering engineers to question assumptions. If latency graphs look fine but customers still complain, dig deeper. Maybe the system isn’t instrumented at the right layer, or maybe the alert thresholds no longer match reality.

At its best, observability blurs the line between development and operations. Everyone becomes part of the reliability conversation, from QA testers to data engineers. Teams that work this way tend to catch subtle issues long before they escalate.

Tools for Monitoring and Observability

Modern observability tools can feel endless: Prometheus, Grafana, OpenTelemetry, Honeycomb, and Datadog are just the tip of the iceberg. Each brings its own strengths. The trick is not to collect everything but to collect what’s meaningful.

Some teams obsess over metrics but forget context. Others gather terabytes of logs and never look at them again.

A balanced stack focuses on actionable insight: traces for where time is spent, logs for what went wrong, metrics for how often it happens.

Centralizing this data helps teams respond faster. When you can trace a slow payment through every microservice, the root cause stops being a mystery. That visibility builds trust across teams and with customers.

In fintech, full-stack observability is becoming a baseline expectation rather than a luxury.

Chaos Engineering and Capacity Planning

Reliability improves fastest when teams stop treating failure as the enemy. Chaos engineering introduces failure on purpose to reveal weak points before they cause trouble.

The Purpose of Chaos Testing

Running controlled failure experiments may sound risky, and it is. But it’s a smarter risk than waiting for the next unplanned outage. By intentionally breaking things (killing processes, injecting latency, or blocking network traffic), SRE teams learn how systems behave under stress.

In fintech, that insight is invaluable.

You might discover that your failover strategy looks fine on paper, but can’t handle peak-hour load. Or that an overlooked dependency makes recovery slower than expected.

Chaos tests expose what real users would experience during a worst-case scenario, and they let you fix it ahead of time.

Integrating Chaos Experiments Into CI/CD

The most effective teams treat chaos as part of release engineering. Tools like Gremlin, Chaos Mesh, or LitmusChaos can integrate directly into CI/CD pipelines. Before every release, they simulate failures and confirm the system can recover gracefully.

It doesn’t have to be elaborate. Even small experiments, such as introducing 200 ms of extra latency on a key API, can surface surprising fragilities.

The goal is to learn continuously, in production-like environments, with minimal risk.
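Tools like Gremlin, Chaos Mesh, or LitmusChaos inject faults at the infrastructure level. For a first experiment inside a test suite, a much smaller in-process alternative is a decorator that delays calls to a chosen function. This is a minimal sketch for test environments only; `get_quote` is a hypothetical stand-in for a key API client, and the injectable `sleep` parameter exists so tests can verify the delay without actually waiting.

```python
import functools
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def inject_latency(delay_s: float = 0.2, probability: float = 1.0,
                   sleep: Callable[[float], None] = time.sleep):
    """Decorator that adds `delay_s` of latency to a fraction of calls (tests only)."""
    def decorator(func: Callable[..., T]) -> Callable[..., T]:
        @functools.wraps(func)
        def wrapper(*args, **kwargs) -> T:
            if random.random() < probability:
                sleep(delay_s)
            return func(*args, **kwargs)
        return wrapper
    return decorator


@inject_latency(delay_s=0.2)
def get_quote(amount: float) -> float:  # hypothetical call to a key API
    return round(amount * 1.02, 2)


start = time.perf_counter()
get_quote(100.0)
print(f"{time.perf_counter() - start:.2f} s")  # at least 0.20 s
```

Lowering `probability` to something like 0.1 turns the same decorator into an intermittent fault, which is often where timeouts and retry logic reveal their weaknesses.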

Capacity Planning and Performance Metrics

While chaos testing explores failure, capacity planning explores growth. The two complement each other. SRE teams look at patterns in metrics such as CPU saturation, queue depth, and throughput to forecast when the current infrastructure will hit its limits.

Good planning ties technical scaling to business expectations.

If a product expects a 40% traffic increase next quarter, the team ensures resources, SLOs, and budgets scale accordingly.
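The arithmetic behind that kind of forecast is simple enough to sketch. The per-instance throughput, current peak, and the 60% target utilization (headroom for spikes and failover) are all hypothetical numbers; real plans would measure them through load testing.

```python
import math


def instances_needed(peak_rps: float, growth: float, rps_per_instance: float,
                     target_utilization: float = 0.6) -> int:
    """Instances required after growth while keeping each below `target_utilization`."""
    projected_peak = peak_rps * (1 + growth)
    return math.ceil(projected_peak / (rps_per_instance * target_utilization))


# Hypothetical: 1,200 req/s today, +40% next quarter, 150 req/s per instance.
print(instances_needed(1_200, 0.40, 150))  # 19
```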

Metrics like mean time to recovery (MTTR) and uptime percentages round out the picture, turning reliability into a continuous improvement cycle.
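MTTR itself is just an average over incident durations. This sketch assumes each incident is recorded as a (detected, resolved) pair; teams differ on whether the clock starts at failure onset or at detection, so the definition should be fixed before comparing numbers over time.

```python
from datetime import datetime, timedelta


def mttr(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Mean time to recovery: average of (resolved - detected) across incidents."""
    if not incidents:
        return timedelta(0)
    total = sum((resolved - detected for detected, resolved in incidents), timedelta(0))
    return total / len(incidents)


incidents = [
    (datetime(2024, 3, 1, 9, 0), datetime(2024, 3, 1, 9, 40)),    # 40 minutes
    (datetime(2024, 3, 8, 14, 0), datetime(2024, 3, 8, 14, 20)),  # 20 minutes
]
print(mttr(incidents))  # 0:30:00
```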

Fintech systems, especially those tied to regulatory SLAs, can’t afford guesswork here. Planning capacity well means customers never see the seams, even when usage doubles overnight.

Conclusion: Reliability as a Fintech Imperative

Reliability is the result of deliberate practice. Site reliability engineering best practices combine data, automation, and human judgment to keep that practice sustainable.

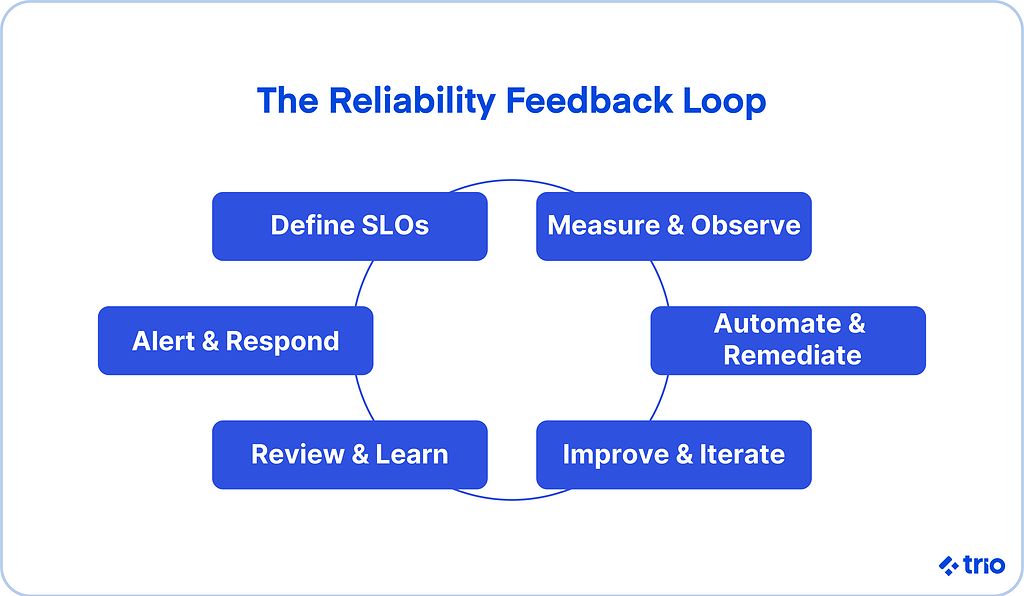

Whether it’s defining clear SLOs, using metrics wisely, or building feedback loops that turn failure into progress, the SRE approach creates systems and teams that get stronger with time.

At Trio, our engineers apply these same principles of site reliability engineering to help fintech platforms stay fast, compliant, and resilient.

To access our fintech specialists, get in touch!

FAQs

What does a site reliability engineer do?

A site reliability engineer applies software engineering to operations, automating systems and using metrics to keep services stable and scalable.

What are the core SRE principles?

The core SRE principles include managing risk with error budgets, defining SLOs, eliminating toil through automation, and designing for simplicity.

What are the four golden signals in SRE?

The four golden signals in SRE are latency, traffic, errors, and saturation: the key metrics for monitoring system health.

How does automation improve system reliability?

Automation improves system reliability by removing repetitive manual work, speeding recovery, and preventing human error during operations.