In every modern organization, data piles up faster than anyone can make sense of it.

You collect logs, transactions, metrics, and customer interactions from dozens of systems, and before long, the question isn’t how to get more data but how to actually use it.

Many teams find themselves drowning in their own success, with valuable information trapped in systems that don’t talk to each other.

This is especially true for industries like fintech, where speed and accuracy define competitive advantage.

A delay of even a few seconds in a payment network or risk model can ripple through millions of dollars in transactions.

Acting on real-time data rather than waiting for overnight reports is quickly becoming a necessity.

That’s where real-time data lakes come in.

By combining the scalability of a data lake with the instant responsiveness of real-time analytics, businesses can move from reactive to proactive.

They learn from data as it is being produced.

At Trio, we’ve seen how fintech firms, in particular, benefit from this shift: once you connect streaming data to decision systems, your product can react to events as they happen instead of after the fact. But you need to make sure that you have secure, compliant data management systems in place.

Our fintech specialists can help you do this. And, instead of spending months finding a full-time employee, we can provide you with a handful of portfolios in as little as 48 hours for staff augmentation and outsourcing.

This article takes you through that transformation, from understanding what a data lake really does to integrating real-time analytics, building pipelines, and applying AI in production environments.

Understanding Data Lakes

Before you can engineer for real-time analytics, you need a clear grasp of what a data lake actually represents.

Essentially, it gives you one place to store everything, structured, semi-structured, or unstructured, without forcing a rigid schema upfront.

What Is a Data Lake?

A data lake is a central repository where you can store raw data in its native format, whether it comes from databases, sensors, or SaaS systems.

Unlike a traditional data warehouse, which requires data to be cleaned and modeled before it is loaded, a data lake lets you define structure later, at query time. This is known as a schema-on-read approach.

This flexibility makes data lakes ideal for advanced analytics, AI, and data science use cases.

For instance, a fintech team might dump a mix of structured and unstructured data like logs from payment gateways, transaction histories, and compliance checks into one data lake, then run machine learning models directly on that combined data set.

Key Characteristics of Data Lakes

While the basic idea is simple, effective data lakes share a few defining traits.

Scalability and Flexibility

A modern data lake must scale with your volumes of data and allow quick adjustments when workloads spike.

Cloud storage options like Amazon S3 or Google Cloud Storage make it practical to store petabytes without massive upfront cost.

For growing fintech firms, this scalability allows teams to start lean and expand as transaction volume increases. We often advise clients at Trio to plan for this early, because designing for scale from the start is far less expensive than reworking or migrating existing systems later.

Schema-on-Read Architecture

The schema-on-read model gives data engineers freedom.

You load data as it is, then apply structure later based on the question you’re trying to answer.

It’s an elegant solution for complex systems, especially when you’re pulling from diverse data sources.

A compliance analyst and a marketing analyst can both use the same underlying data differently, without one having to wait for the other’s pipeline to finish.
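To make schema-on-read concrete, here is a minimal sketch in plain Python. The event fields, the two schemas, and the `read_with_schema` helper are all hypothetical; a real lake would apply the same idea through a query engine rather than a hand-written loop.

```python
import json

# Raw events are stored exactly as they arrived; no schema was enforced on write.
RAW_EVENTS = [
    '{"event_id": "e1", "type": "payment", "amount": "120.50", "country": "DE", "campaign": "spring"}',
    '{"event_id": "e2", "type": "payment", "amount": "9800.00", "country": "US"}',
    '{"event_id": "e3", "type": "login", "country": "US", "campaign": "spring"}',
]


def read_with_schema(raw_lines, schema):
    """Apply a schema at read time: keep only the requested fields and cast them."""
    for line in raw_lines:
        record = json.loads(line)
        # Fields missing from a record become None instead of failing the read.
        yield {
            field: (cast(record[field]) if field in record else None)
            for field, cast in schema.items()
        }


# Two hypothetical consumers project the same raw data in different ways.
compliance_schema = {"event_id": str, "amount": float, "country": str}
marketing_schema = {"event_id": str, "campaign": str}

compliance_view = list(read_with_schema(RAW_EVENTS, compliance_schema))
marketing_view = list(read_with_schema(RAW_EVENTS, marketing_schema))
```

Neither view required rewriting the stored events, which is the point: the structure lives with the reader, not the storage layer.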

Multi-Format Data Storage (Structured, Semi-Structured, Unstructured)

You can store structured data like SQL tables, semi-structured formats such as JSON, and unstructured data like voice recordings or PDFs.

This matters because valuable insights rarely come from a single data type.

In the financial services industry, for example, combining transactional data with customer data and even support logs can uncover patterns that drive fraud prevention or customer retention.

Benefits of Using a Data Lake

A data lake is largely about accessibility and potential. Done right, it becomes the heartbeat of your organization’s data architecture.

Centralized Storage and Accessibility

A data lake consolidates everything into a single source of truth.

Instead of scattered spreadsheets, siloed databases, and shadow dashboards, you get a unified foundation for business intelligence.

It’s not uncommon for a company that implements a data lake to find hidden correlations between departments, say, how customer support volume tracks against payment failures.

Enhanced Data Democratization

Once the lake is built, access changes everything.

Tools like Power BI and Databricks allow analysts across departments to explore real-time data safely, without waiting on IT bottlenecks.

It may sound idealistic, but seamless data democratization can reshape company culture.

We’ve noticed fintech teams work faster when they can test ideas directly instead of queuing requests for data pulls.

Cost Efficiency and Elastic Scalability

Cloud-based data lakes are inherently elastic. You pay for the storage and computing you use.

This makes them far more cost-efficient than traditional systems that require fixed resources.

Pair that with open formats such as Apache Parquet or Delta Lake, and you keep the flexibility to integrate with most analytics engines later.

Real-Time Data Analytics Explained

Real-time analytics gives you speed, and a data lake gives you depth. Together, they enable continuous awareness of what’s happening inside your business as it unfolds.

What Is Real-Time Analytics?

In simple terms, real-time analytics means analyzing and acting on data the moment it’s created.

Think of a credit card transaction being flagged for fraud as it happens, not an hour later.

Behind the scenes, streaming platforms like Kafka or Kinesis feed this live data into your analytical systems, where rules or models trigger instant outcomes.

It’s not always easy to achieve, though. The infrastructure must be carefully designed to minimize latency and maintain accuracy.

But when done right, real-time processing can turn your operations from reactive to predictive.

The Importance of Real-Time Data for Business Decision-Making

When teams can access real-time data, they make smaller, faster decisions that compound over time.

A lending platform might adjust interest rates based on minute-to-minute market data. A fraud monitoring tool can interrupt suspicious transactions midstream. Even a customer support chatbot might switch tone if sentiment analysis detects frustration in real time.

These may seem like small interactions, but together they define how a company competes.

Core Use Cases Across Industries

Real-time analytics is already in production use across several industries.

Financial Services and Trading Platforms

In financial markets, firms rely on real-time analytics to monitor trades, manage exposure, and prevent cascading losses.

By feeding transactional data into a data lake, analysts can simulate risk scenarios or refine models continuously.

This mix of historical data and stream processing keeps both traders and regulators confident in the numbers.

E-Commerce and Customer Personalization

E-commerce companies apply similar logic but in more human ways.

They track clicks, cart activity, and purchase patterns to adjust recommendations instantly. It’s the reason you see relevant suggestions before finishing checkout.

A data lake helps tie these interactions to past behavior, creating context that drives retention.

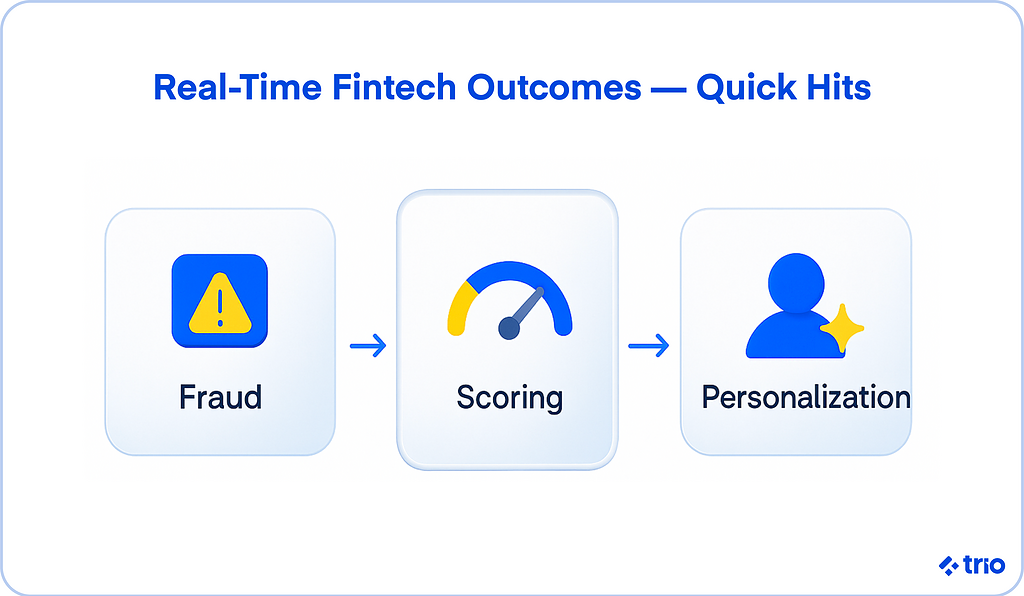

Fraud Detection and Risk Scoring

Fraud detection thrives on velocity. The faster your models score transactions as they are generated, the more likely you are to stop a suspicious payment before it completes.

Machine learning applied on top of real-time data lakes enables adaptive scoring systems that get sharper with every event, identifying patterns too subtle for static systems to catch.

IoT and Sensor-Based Monitoring

Industries that depend on sensors, from logistics to manufacturing, also depend on timing.

A delayed alert can mean a lost shipment or an equipment failure.

Integrating IoT streams into your data lake allows operators to analyze signals as they arrive and trigger maintenance or rerouting automatically.

Integrating Real-Time Data Into Data Lakes

Adding real-time data to your data lake sounds straightforward, but doing it right requires thoughtful data engineering.

A live stream of events is only valuable if the pipeline behind it can handle speed, reliability, and structure simultaneously.

This is one of the many reasons you should hire a data integration expert familiar with the nuances of your industry.

Key Data Engineering Principles

The foundation of any real-time system is its design.

You’re essentially building highways for data, routes that must stay open and fast, even during rush hour.

Data Ingestion and Stream Processing

Every second, applications, sensors, and APIs generate data from various sources. Capturing this flow efficiently is the first challenge.

Platforms like Apache Kafka, Amazon Kinesis, or Google Pub/Sub can handle millions of records per second.

They act like central nervous systems, ensuring your streams stay consistent and recover quickly if something breaks.

Good stream processing means more than speed.

It includes filtering duplicates, validating schema, and enriching messages before they’re written into your data lake.

We’ve seen fintech teams significantly reduce detection times for fraud simply by improving how streams are pre-processed and partitioned.
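The pre-processing steps described above (validating schema, filtering duplicates, and partitioning) can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not a production consumer: the field names, the four-partition layout, and the in-memory `seen_ids` set are all hypothetical, and a real pipeline would bound that set or keep it in a state store.

```python
import zlib

# Hypothetical contract for incoming events: field name -> expected Python type.
REQUIRED_FIELDS = {"event_id": str, "account_id": str, "amount": float}


def is_valid(event):
    """Return True when every required field is present with the expected type."""
    return all(
        isinstance(event.get(name), expected)
        for name, expected in REQUIRED_FIELDS.items()
    )


def hash_partition(key, num_partitions):
    """Map a key to a stable partition number (crc32 is stable across runs)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def preprocess(events, num_partitions=4):
    """Yield validated, de-duplicated events tagged with a partition number."""
    seen_ids = set()
    for event in events:
        if not is_valid(event):
            continue  # a real pipeline would route this to a dead-letter queue
        if event["event_id"] in seen_ids:
            continue  # duplicate delivery
        seen_ids.add(event["event_id"])
        # Partitioning by account keeps all events for one account together.
        yield {**event, "partition": hash_partition(event["account_id"], num_partitions)}


incoming = [
    {"event_id": "e1", "account_id": "acc-1", "amount": 10.0},
    {"event_id": "e1", "account_id": "acc-1", "amount": 10.0},  # duplicate delivery
    {"event_id": "e2", "account_id": "acc-2"},  # missing amount
    {"event_id": "e3", "account_id": "acc-1", "amount": 25.5},
]
clean = list(preprocess(incoming))
```

Only `e1` and `e3` survive, and both land in the same partition because they share an account.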

Data Transformation and Enrichment

Once the data lands, it often needs cleaning or context. That’s where transformation comes in.

Using Apache Spark Structured Streaming, whether self-managed or on Databricks, you can normalize data, merge related events, or add external references like exchange rates or risk categories.

Of course, you could also create your own platform, but this isn’t realistic for most smaller teams.

This stage adds intelligence.

For example, a payment transaction becomes more meaningful when linked to a customer profile and merchant record.

You’re not just storing events anymore; you’re preparing data for advanced analytics that can feed AI and predictive modeling later on, often written in Python.
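As a rough illustration of enrichment, the sketch below links a payment to a customer profile and a merchant record and adds a converted amount. The lookup tables, field names, and exchange rates are invented for the example; in practice they would come from reference tables or a cache.

```python
from decimal import Decimal

# Hypothetical reference data; the rates are illustrative, not real quotes.
CUSTOMERS = {"c-1": {"segment": "retail", "risk_category": "low"}}
MERCHANTS = {"m-9": {"name": "Example Books", "category": "bookstore"}}
RATES_TO_EUR = {"EUR": Decimal("1"), "USD": Decimal("0.90")}


def enrich(payment):
    """Return a copy of the payment with customer, merchant, and FX context attached."""
    customer = CUSTOMERS.get(payment["customer_id"], {})
    merchant = MERCHANTS.get(payment["merchant_id"], {})
    rate = RATES_TO_EUR.get(payment["currency"])
    amount_eur = None  # stays None when no rate is known for the currency
    if rate is not None:
        amount_eur = (Decimal(payment["amount"]) * rate).quantize(Decimal("0.01"))
    return {
        **payment,
        "customer_segment": customer.get("segment"),
        "risk_category": customer.get("risk_category"),
        "merchant_category": merchant.get("category"),
        "amount_eur": amount_eur,
    }


enriched = enrich(
    {"payment_id": "p-1", "customer_id": "c-1", "merchant_id": "m-9",
     "amount": "100.00", "currency": "USD"}
)
unknown = enrich(
    {"payment_id": "p-2", "customer_id": "c-404", "merchant_id": "m-404",
     "amount": "5.00", "currency": "GBP"}
)
```

Amounts are kept as `Decimal` rather than `float` so that currency arithmetic does not pick up binary rounding errors.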

Data Orchestration and Workflow Automation

Even the most elegant pipelines fail without orchestration.

Tools like Airflow, Prefect, or Dagster coordinate how jobs run, monitor dependencies, and recover gracefully from errors. They help you manage data automatically so humans don’t have to intervene every time something lags.

Strong orchestration also supports data governance by tracking where data came from and what transformations occurred. In regulated environments like fintech, this kind of lineage tracking isn’t just helpful, it’s mandatory.
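Orchestrators such as Airflow, Prefect, and Dagster each have their own APIs, so the sketch below does not imitate any of them. It only shows the underlying idea with the standard library: run tasks in dependency order, retry failures, and keep a lineage record of what ran. The task names and the `run_pipeline` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks it depends on (hypothetical task names).
DEPENDENCIES = {
    "ingest": set(),
    "validate": {"ingest"},
    "enrich": {"validate"},
    "load_lake": {"enrich"},
    "refresh_dashboard": {"load_lake"},
}


def run_pipeline(tasks, dependencies, max_retries=2):
    """Run tasks in dependency order with retries; return a lineage log."""
    lineage = []
    for name in TopologicalSorter(dependencies).static_order():
        for attempt in range(1, max_retries + 2):
            try:
                tasks[name]()
                lineage.append({"task": name, "attempt": attempt, "status": "ok"})
                break
            except Exception as exc:
                lineage.append({"task": name, "attempt": attempt, "status": f"failed: {exc}"})
        else:
            # Every attempt failed, so stop before running downstream tasks.
            raise RuntimeError(f"{name} failed after {max_retries + 1} attempts")
    return lineage


attempts = {"enrich": 0}


def flaky_enrich():
    """Fail on the first call only, to simulate a transient error."""
    attempts["enrich"] += 1
    if attempts["enrich"] == 1:
        raise ValueError("transient")


tasks = {name: (lambda: None) for name in DEPENDENCIES}
tasks["enrich"] = flaky_enrich
lineage_log = run_pipeline(tasks, DEPENDENCIES)
```

The lineage log records the failed first attempt of `enrich` as well as its successful retry, which is the kind of trail auditors tend to ask for.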

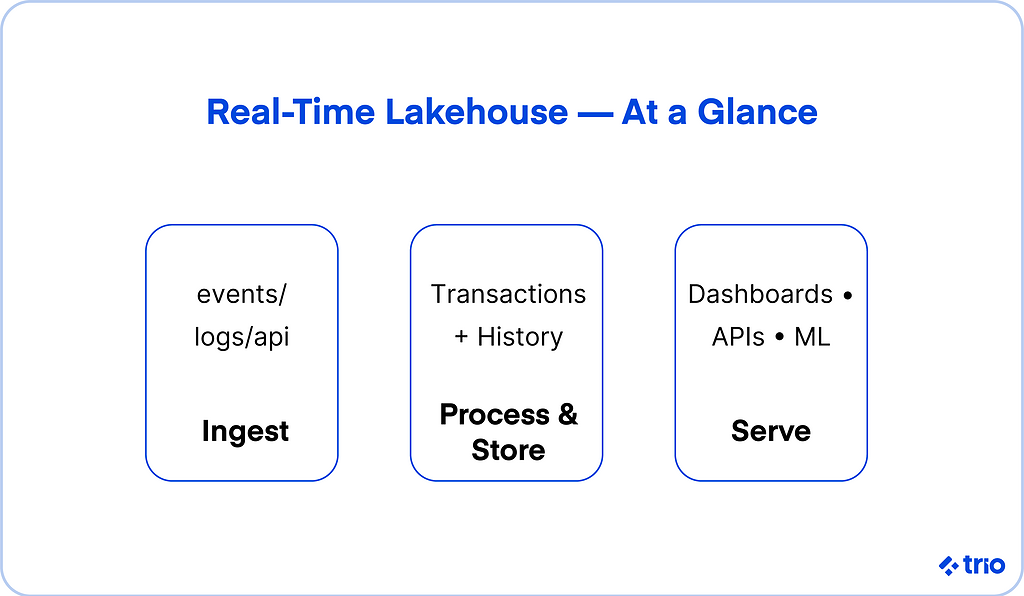

Building a Real-Time Data Pipeline

Once you understand the principles, you can start assembling the moving parts. Think of it as a three-stage cycle that never stops running.

Step 1: Capture and Stream (Kafka, Kinesis, Pub/Sub)

At the capture layer, your systems record real-time data as it’s generated: payment approvals, IoT signals, API calls, you name it.

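Kafka and Kinesis are common choices here because they persist and replicate events durably. Delivery is typically at-least-once, so duplicates are possible after retries. Kafka can provide exactly-once processing when idempotent producers and transactions are used, while Kinesis consumers usually de-duplicate downstream. That matters for financial workloads, where duplication could have serious consequences.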

Step 2: Process and Store (Delta Lake, Snowflake, BigQuery)

Next comes storage. Modern platforms like Delta Lake, Snowflake, and Google BigQuery support both batch and streaming ingestion, so you can query live and historical data together.

Delta Lake, in particular, provides ACID transactions, so concurrent reads and writes see a consistent table. Its versioned transaction log also lets you query or restore earlier versions of a table.

For fintech systems that must stay audit-ready, that’s a major advantage.

Step 3: Analyze and Visualize (Databricks, Power BI, Looker)

Finally, insights surface through analytics and visualization tools. Databricks, Power BI, and Looker make it easier to create real-time dashboards that help teams act quickly.

A fraud analyst might see anomalies seconds after they occur. An operations team could watch loan applications flow through pipelines and spot bottlenecks instantly.

Overcoming Integration Challenges

There’s no denying that bringing all this together can get messy.

Real-time systems introduce new risks, including latency, data quality, and compliance issues.

But each challenge can be managed with planning and the right tools.

Latency Reduction and Event Consistency

Latency often hides in places you don’t expect: network hops, inefficient serialization, or heavy joins during stream processing.

Optimizing these paths, using smaller windowing intervals, or caching intermediate states can drastically improve responsiveness.
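To see why window size affects responsiveness, consider a tumbling (fixed, non-overlapping) window. A result can only be emitted once its window closes, so a shorter window produces output sooner. The sketch below is a simplified stand-in for what a streaming engine does; it assumes integer timestamps in seconds that arrive in order, and it skips windows that contain no events.

```python
def tumbling_counts(timestamps, window_seconds):
    """Yield (window_start, count) each time a window closes.

    Assumes timestamps are integers in seconds and arrive in order.
    """
    current_start = None
    count = 0
    for ts in timestamps:
        start = ts - ts % window_seconds
        if current_start is None:
            current_start = start
        if start != current_start:
            # An event from a later window arrived, so the current one is closed.
            yield current_start, count
            current_start, count = start, 0
        count += 1
    if current_start is not None:
        yield current_start, count  # flush the final, still-open window


event_times = [0, 4, 5, 9, 10]
five_second = list(tumbling_counts(event_times, 5))
ten_second = list(tumbling_counts(event_times, 10))
```

With 5-second windows the first result is available once the event at second 5 arrives; with 10-second windows nothing is emitted until second 10.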

Consistency is another hurdle. Financial applications, for example, can’t tolerate double entries or missing events.

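To prevent this, streaming frameworks offer “exactly-once” semantics, where the effect of each record is applied only once, even if the record is redelivered during retries. In practice, this usually relies on idempotent writes or transactional commits.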
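One common way to get an exactly-once effect on top of at-least-once delivery is to make the consumer idempotent. The sketch below is a toy in-memory version with hypothetical names (`IdempotentLedger`, `amount_cents`); in a real system the balance update and the record of the applied ID would need to commit atomically in the same transaction.

```python
class IdempotentLedger:
    """Applies each event's effect once, even if the event is delivered repeatedly."""

    def __init__(self):
        self.balances = {}
        self.applied_ids = set()

    def apply(self, event):
        """Apply the event and return True, or return False if it was already applied."""
        if event["event_id"] in self.applied_ids:
            return False  # redelivery after a retry; the effect is already recorded
        account = event["account"]
        self.balances[account] = self.balances.get(account, 0) + event["amount_cents"]
        self.applied_ids.add(event["event_id"])
        return True


ledger = IdempotentLedger()
deliveries = [
    {"event_id": "e1", "account": "acc-1", "amount_cents": 500},
    {"event_id": "e2", "account": "acc-1", "amount_cents": -200},
    {"event_id": "e1", "account": "acc-1", "amount_cents": 500},  # redelivered
]
results = [ledger.apply(event) for event in deliveries]
```

The redelivered `e1` is ignored, so the balance ends at 300 cents rather than 800.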

Data Quality and Governance in High-Velocity Streams

When you’re dealing with a constant flow, errors multiply quickly. A single malformed transaction could distort a dashboard within seconds. That’s why modern data teams rely on automated data validation and metadata monitoring. Some even use AI to detect anomalies in the pipeline itself, flagging suspicious changes in schema or sudden drops in data volume.
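Two of the checks mentioned above, schema changes and sudden drops in volume, are simple enough to sketch without any AI at all. The function names, the expected field set, and the 50% drop threshold below are assumptions chosen for illustration.

```python
def schema_drift(expected_fields, record):
    """Return the fields that are missing from, or unexpected in, a record."""
    actual = set(record)
    return {
        "missing": sorted(expected_fields - actual),
        "unexpected": sorted(actual - expected_fields),
    }


def volume_dropped(recent_counts, current_count, max_drop=0.5):
    """Flag the current interval if volume fell more than max_drop below the recent mean."""
    if not recent_counts:
        return False  # no baseline yet
    baseline = sum(recent_counts) / len(recent_counts)
    return baseline > 0 and current_count < baseline * (1 - max_drop)


EXPECTED = {"event_id", "amount"}
drift = schema_drift(EXPECTED, {"event_id": "e1", "amt": 5})
sharp_drop = volume_dropped([1000, 980, 1020], 300)
normal_dip = volume_dropped([1000, 980, 1020], 700)
```

Here a renamed field (`amt` instead of `amount`) shows up as both a missing and an unexpected field, and a fall from roughly 1,000 events per interval to 300 is flagged while a dip to 700 is not.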

Strong data governance policies must sit at the heart of this system. That includes logging, traceability, and clear accountability for every process touching financial data.

Analytics and AI in Real-Time Data Lakes

Once your data lake is capturing and processing data continuously, it’s time to put it to work. AI adds the next layer of intelligence, giving you predictions and decisions in real time instead of static reports.

How AI Enhances Real-Time Analytics

AI thrives on fresh data. With a real-time data lake, machine learning models can learn and adapt continuously. In fintech, this can mean identifying unusual spending patterns or adjusting credit risk assessments before a transaction finalizes.

Predictive Modeling and Machine Learning on Streaming Data

With streaming data, systems can use incremental (online) learning instead of retraining overnight, updating the model as each new observation arrives.

For a lending startup, this might mean adjusting credit scores as customers build new spending habits.
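As a minimal sketch of incremental learning, the class below implements online logistic regression with one stochastic gradient step per observation. It stands in for whatever model a team would actually use; the single feature (a hypothetical credit-utilization ratio), the labels, and the learning rate are invented for the example.

```python
import math


class OnlineLogisticRegression:
    """Logistic regression updated one observation at a time with SGD."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x):
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        return 1.0 / (1.0 + math.exp(-z))

    def learn_one(self, x, y):
        """Take one gradient step on the log loss for a single (x, y) pair."""
        error = self.predict_proba(x) - y  # gradient of log loss w.r.t. z
        self.weights = [
            w - self.learning_rate * error * xi for w, xi in zip(self.weights, x)
        ]
        self.bias -= self.learning_rate * error


model = OnlineLogisticRegression(n_features=1)
untrained = model.predict_proba([0.9])

# Hypothetical stream: high utilization tends to default (1), low does not (0).
stream = [([0.9], 1), ([0.1], 0)] * 200
for features, label in stream:
    model.learn_one(features, label)

high_risk = model.predict_proba([0.9])
low_risk = model.predict_proba([0.1])
```

The model never sees the full dataset at once; each event nudges the weights, so scores can shift as customer behavior changes.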

Automated Anomaly Detection and Forecasting

AI-powered analytics platforms and detection models can notice irregularities long before humans do.

Maybe a payment gateway suddenly sees a spike in failed transactions, or sensor readings drift outside normal ranges.

With real-time processing, those patterns trigger alerts instantly.
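A simple baseline for this kind of alerting is a rolling z-score: compare each new value to the mean and standard deviation of recent history. The sketch below is one possible approach, not a description of any particular platform; the window size, threshold, and the sample failure counts are assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags values that sit far from the mean of a rolling window."""

    def __init__(self, window=20, threshold=3.0, min_history=5):
        self.history = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def observe(self, value):
        """Return True if the value is anomalous relative to recent history."""
        is_anomaly = False
        if len(self.history) >= self.min_history:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            # With zero variance there is no scale to compare against, so skip.
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            self.history.append(value)  # keep anomalies out of the baseline
        return is_anomaly


# Hypothetical failed-transaction counts per minute, with one spike.
failed_per_minute = [4, 5, 6, 5, 4, 5, 6, 40, 5]
detector = RollingAnomalyDetector()
flags = [detector.observe(count) for count in failed_per_minute]
```

Only the spike to 40 is flagged. Excluding flagged values from the history stops a single incident from inflating the baseline, at the cost of adapting more slowly to a genuine level shift.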

Natural Language Processing for Real-Time Insights

Some analytics systems now use natural language queries so teams can ask questions conversationally: “What regions are showing higher loan defaults this week?”

Tools like these lower the barrier to data access, allowing non-technical staff to extract insight from real-time analytics without touching code.

The Role of Big Data in Modern Analytics

All of this rests on the backbone of big data and how it’s processed.

From Batch to Stream Processing

Traditional batch processing still has its place, but it’s too slow for scenarios that demand immediate action.

Stream processing complements it, analyzing raw data as it flows in.

The result is a hybrid environment where historical trends and live updates coexist.

Distributed Systems and Parallel Computation

Underneath the surface, distributed engines like Apache Spark, and managed platforms built on it such as Databricks, handle the heavy lifting.

They divide workloads across nodes, making it possible to compute terabytes of data in parallel.

This structure supports advanced analytics at the scale modern fintech demands.
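The pattern behind this is partition, compute partial results, then combine. The sketch below imitates it on one machine, using a thread pool as a stand-in for cluster nodes; it illustrates the shape of the computation rather than real distributed performance, and the record layout is hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(records, n):
    """Split records into n roughly equal chunks."""
    return [records[i::n] for i in range(n)]


def partial_sum(chunk):
    """Compute the partial result for one chunk: (total, count)."""
    return sum(r["amount_cents"] for r in chunk), len(chunk)


def parallel_average(records, workers=4):
    """Average amount computed from per-chunk partial results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum, partition(records, workers)))
    total = sum(t for t, _ in partials)
    count = sum(c for _, c in partials)
    return total / count if count else 0.0


records = [{"amount_cents": n} for n in range(1, 101)]
average = parallel_average(records)
```

Each worker returns a total and a count rather than its own average, because partial averages cannot be combined correctly when chunks differ in size.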

Transforming Raw Data Into Actionable Insights

Having data isn’t enough; you need to make it usable.

The Data Lifecycle: From Collection to Consumption

The data lifecycle starts with collection, then moves through cleaning, enrichment, and delivery.

When this process is automated, decision-makers always have access to the latest information.

Maintaining good data governance across this flow ensures accuracy and compliance.

Data Visualization and Decision Enablement

Visual tools, like dashboards built on Power BI or Looker, give executives a real-time view of operational performance.

They can see revenue trends, detect anomalies, or evaluate business intelligence metrics without waiting for manual reports.

Automation and Continuous Intelligence

Automation allows for continuous intelligence: systems that learn and respond automatically.

For instance, a fintech firm could adjust pricing models or capital reserves as market data changes.

Our expert fintech developers often help clients architect this kind of setup, ensuring it scales cleanly as data volumes grow.

The Role of APIs in Delivering Real-Time Insights

APIs connect everything and have quickly become an essential part of leveraging data for real-time insights.

They make it possible for data lakes, apps, and dashboards to share insights instantly.

Without APIs, you’d be limited to traditional data movement like static exports. With them, you can push insights into any system, from CRMs to trading bots.

Implementing Real-Time Data Lakes in Financial Services

What does all of this look like practically for a company in the fintech sector?

Why Financial Institutions Are Moving Toward Real-Time Data

Banks and payment companies are under constant pressure to act faster and stay compliant.

Traditional batch-oriented databases often struggle to keep up with high-velocity transactional data.

Implementing a data lake solves that by providing real-time visibility into risks, liquidity, and customer behavior, all while maintaining data protection and traceability.

Core Fintech Use Cases

- Fraud Detection and AML Monitoring: Real-time detection stops losses before they happen, analyzing behavior as transactions occur.

- Real-Time Credit Scoring: Instant access to spending and repayment data allows for dynamic credit scoring that reflects current conditions.

- Personalized Banking: AI models analyze user behavior to recommend savings plans, products, or tailored financial advice in real time.

Governance, Security, and Compliance in Data Lakes

When dealing with money and privacy, precision isn’t optional.

Strong data governance supports compliance with regulations and standards like GDPR, CCPA, and PCI DSS.

It also defines who can access what, when, and how. Encryption, role-based access control, and audit logs are all standard.
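As a rough sketch of how role-based access control and audit logging fit together, the example below checks a permission and records every decision, allowed or not. The roles, permission strings, and user names are hypothetical, and a real deployment would rely on the access controls of the storage and query layers rather than application code alone.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "fraud_analyst": {"transactions:read", "alerts:write"},
    "marketing_analyst": {"campaigns:read"},
}

AUDIT_LOG = []


def check_access(user, role, permission):
    """Return whether the role grants the permission, and log the decision."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    AUDIT_LOG.append(
        {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "permission": permission,
            "allowed": allowed,
        }
    )
    return allowed


granted = check_access("dana", "fraud_analyst", "transactions:read")
denied = check_access("lee", "marketing_analyst", "transactions:read")
```

Denied requests are logged alongside granted ones, since attempted access is often as relevant to an audit as successful access.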

Trio often helps fintech clients align data architecture with these regulations, balancing security with usability so developers can still move fast without breaking policy.

Future Trends in Financial Data Analytics

The next wave of real-time analytics is already forming around a few major shifts.

- Convergence of Data Lakes and Warehouses: Unified lakehouse architectures are eliminating the gap between flexibility and structure.

- AI-Native Data Engineering: Pipelines that tune themselves, reallocate compute, and self-heal are becoming standard.

- Decision Intelligence Platforms: Real-time systems that combine AI, machine learning, and automation to assist or replace manual decisions.

- Open Data Standards: Formats like Apache Parquet and Apache Iceberg are promoting interoperability, allowing fintech companies to mix and match the best tools for their needs.

Conclusion

Real-time data lakes have become a big part of staying competitive.

When you combine AI, big data, and real-time analytics, you gain the ability to act on information while it still matters.

For fintechs, that can mean catching fraud instantly, offering loans faster, or personalizing services at scale.

At Trio, we see it every day: the companies that learn to harness their data in real time are the ones that set the pace for the future of finance.

We have specialists on hand who are not only able to help you set up your data lakes but also build and integrate secure and compliant analysis tools.

To hire fintech developers with Trio, get in touch for a free consultation.

FAQs

What is a real-time data lake?

A real-time data lake is a central repository that stores raw, streaming data and makes it available for analysis instantly.

It combines large-scale data storage with real-time analytics to support fast, data-driven decisions.

How does a data lake differ from a data warehouse?

A data lake differs from a data warehouse because it stores all data types (structured, semi-structured, and unstructured) in raw form, without requiring a predefined schema.

It’s more flexible and better suited for real-time analytics and AI-driven insights.

Why is real-time analytics important for fintech companies?

Real-time analytics is important for fintech companies because it helps detect fraud, assess credit risk, and personalize user experiences instantly.

In financial services, acting seconds faster can prevent major losses.

What technologies are used in real-time data lakes?

Technologies used in real-time data lakes include Apache Kafka, Amazon Kinesis, Delta Lake, Databricks, and Power BI.

These tools handle streaming, storage, processing, and visualization of massive data flows efficiently.